We feel like 2025 is where Load Network found its feet. Rebranding from WeaveVM, we moved away from being just an EVM for Arweave and realized what the combination of EVM compute, HyperBEAM devices, AO compute, and permanent storage really unlocked: an onchain data center that solves the rigidity of web3 and the centralization of web2.

We’re closing out 2025 proud of what we shipped and of the weird new surface area we stumbled onto. We went into fair launch mode, brought a new Arweave-adjacent temporary storage layer to market, and polished the Load L1 in preparation for mainnet.

In this letter we’ll look at the highlights of 2025’s ships at Load Network.

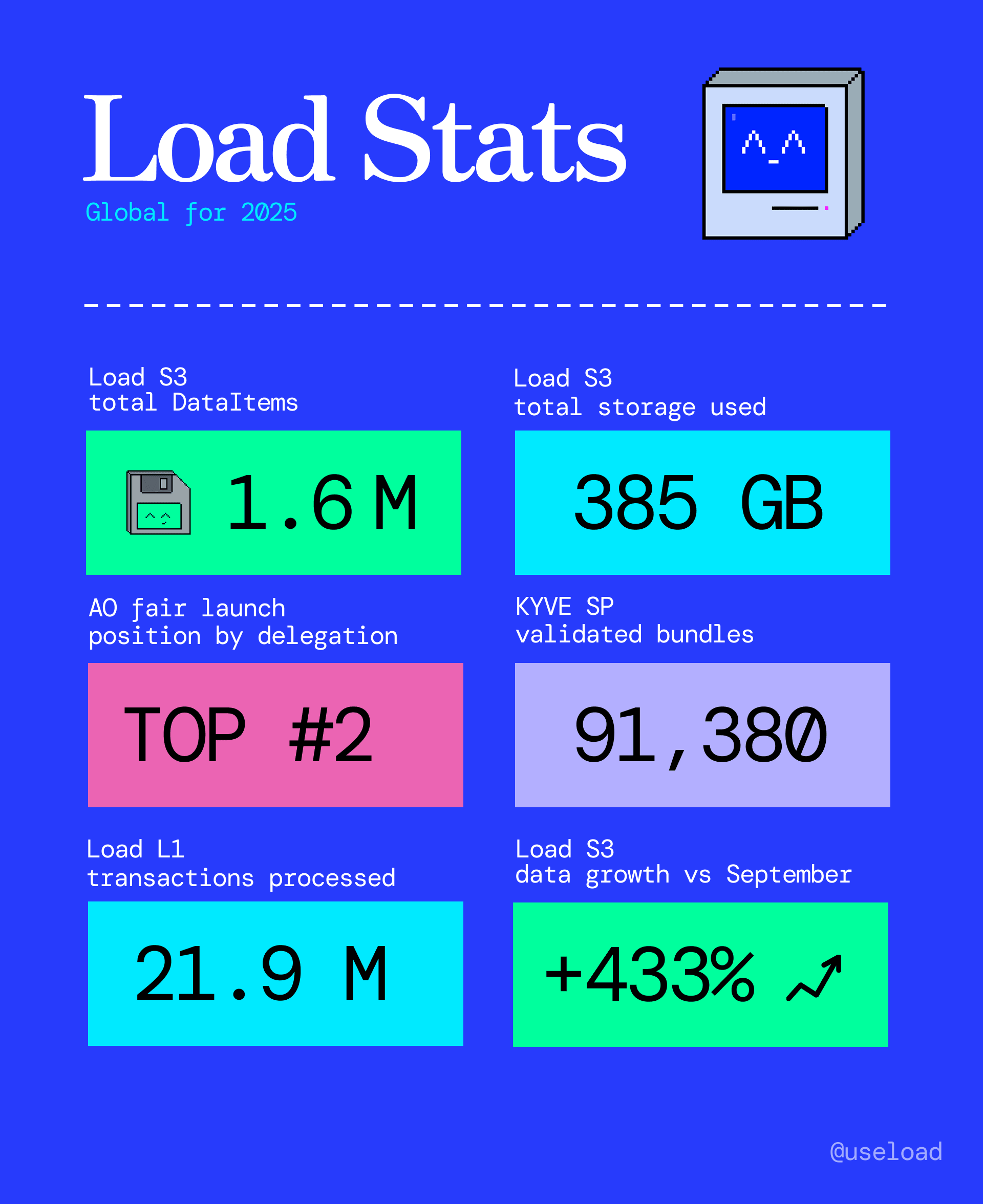

But first, here are some top-level metrics for the year across Load S3 and the Load EVM L1:

The Load Network brand

One of the most obvious changes in 2025 is that we are no longer WeaveVM.

From a practical perspective, the WeaveVM name was a limitation. We were being confused with Arweave itself, and we had outgrown the original mission of providing permanent storage for EVM chains. Load Network sounds much more like the name of something that could call itself the onchain data center.

On the aesthetic front, we moved towards a more distinctive visual language inspired by the golden age of operating systems, giving the brand more character. WeaveVM’s design was hyperserious and unrelatable; Load needed to be fun and memorable without lowering itself to memedom. We wrote a design case study that goes behind the scenes of the Load rebrand here.

The Load Network fair launch

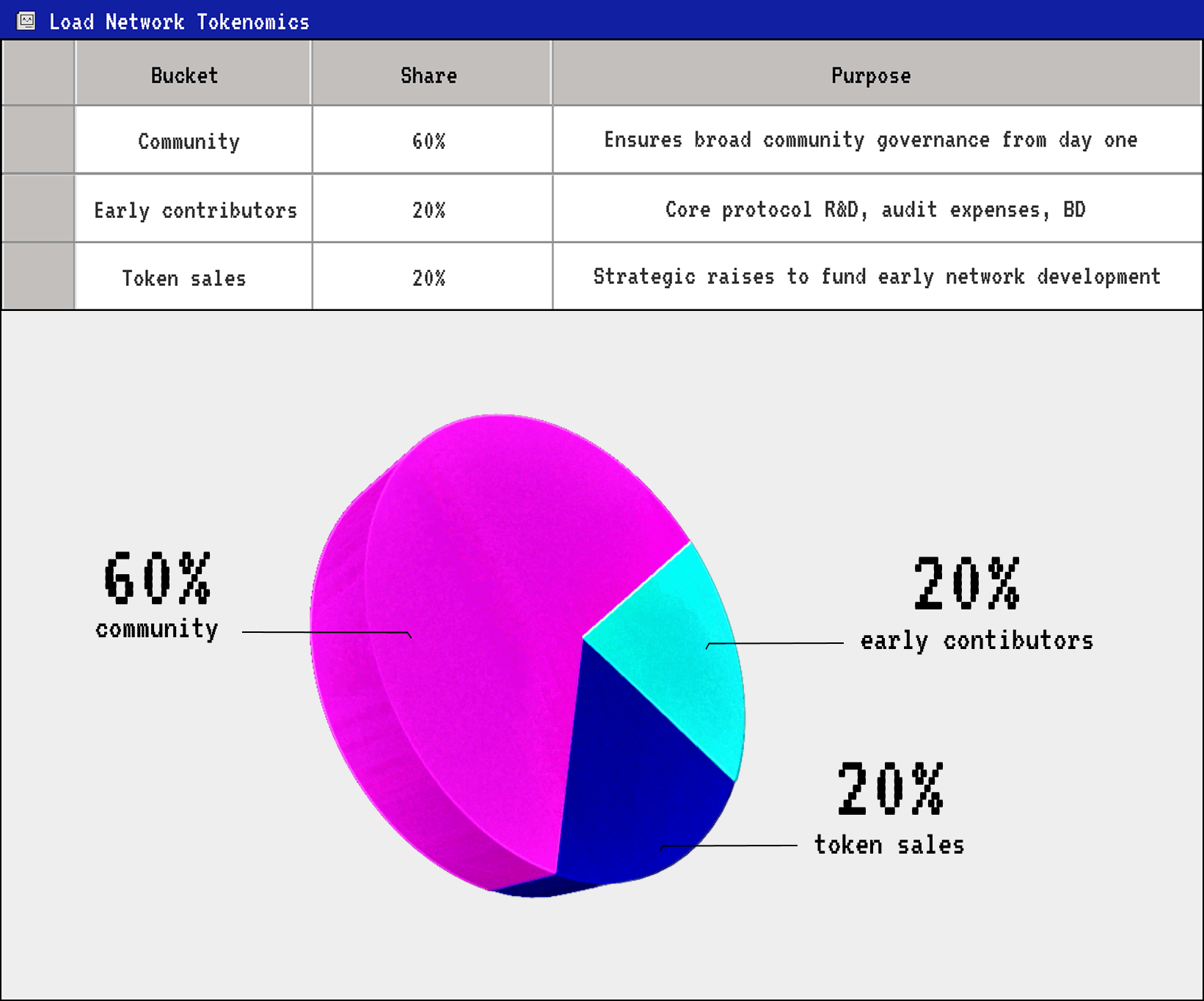

In June we announced the Load Network fair launch, tokenomics, token utility, and next steps towards mainnet.

We allocated 60% of $LOAD to the community as a testament to our belief that fair launches patch the bugs in fundraising and distribution. Since launch, Load has grown into the #2 most popular project in the AO fair launch, reaching a broader base of early supporters from day one than pure VC funding could have achieved.

The onchain data center

This year Load formed its vision of the onchain data center: a cloud for compute and storage with the flexibility of web2 giants like GCP and AWS, and the properties of blockchain: cryptographic proof, permanence, and permissionlessness.

We believe this can only be realized with the combination of smart contracts (Load L1), AO processes (HyperBEAM), temporary storage (Load S3), and permanence (Arweave).

We shipped the compute engines: a high-bandwidth EVM L1 natively integrated with both Arweave and Load S3, and a stack of HyperBEAM devices on Load’s own bare metal, powering AO (WASM/Lua) and GPU-based compute.

We also proved the temporary -> permanent data pipeline thesis; projects like KYVE and Apus chose Load S3 + Turbo to power flexible storage for backends and the app layer.

Load Cloud V2 shipped as a user-friendly way to access this storage pipeline and generate access keys. Read more about the vision here.

Simpler ways to get data onchain: load0

In April we shipped load0, which is basically “Large Bundles on steroids,” with a cloud-like UX surface backed by eventual onchain permanence.

We wanted to make onchain storage feel like a web2 service, so we wrapped Load L1 calldata storage in a simple API and made it optimistic with a centralized caching layer. Looking back, this was an early proto-version of the s3-agent -> Arweave via ANS-104 DataItems model we’d go on to develop later in the year.
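To make the "optimistic" part concrete, here is a minimal Python sketch of a cache-first store that hands back a content-derived key immediately and settles the data onchain later. It is not load0's implementation: the `OptimisticStore` class, the SHA-256 keying, and the `submit_calldata` callback (standing in for posting bytes as Load L1 calldata) are all hypothetical.

```python
import hashlib
from typing import Callable, Dict, List


class OptimisticStore:
    """Hypothetical cache-first store: answer now, persist onchain later."""

    def __init__(self, submit_calldata: Callable[[bytes], str]):
        # submit_calldata is an assumed stand-in for posting bytes as L1
        # calldata and returning a transaction hash; it is not a load0 API.
        self._submit = submit_calldata
        self._cache: Dict[str, bytes] = {}
        self._pending: List[str] = []
        self._tx: Dict[str, str] = {}

    def put(self, data: bytes) -> str:
        # Key is derived from content, so it is known before settlement.
        key = hashlib.sha256(data).hexdigest()
        if key not in self._cache:
            self._cache[key] = data
            self._pending.append(key)
        return key  # returned immediately: the optimistic part

    def get(self, key: str) -> bytes:
        # Reads are served from the cache whether or not the item has settled.
        return self._cache[key]

    def status(self, key: str) -> str:
        return "settled" if key in self._tx else "pending"

    def flush(self) -> int:
        # Background job: push every pending item onchain, record its tx hash.
        flushed = 0
        while self._pending:
            key = self._pending.pop(0)
            self._tx[key] = self._submit(self._cache[key])
            flushed += 1
        return flushed
```

The trade-off mirrors the one described above: uploads return at cache speed, while permanence is eventual and only arrives once a flush settles.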

A nice sign of product-market fit landed later in the year when Fractals integrated load0 for immutable DePIN marketplace metadata, removing the long-term operational cost of hosting that metadata in centralized storage.

DeFi primitives for Load

In our fully Loaded DeFi announcement, we shared the financial building blocks every EVM chain needs (even one focused on storage).

We announced:

- tapestry.fi: a Uniswap-based DEX built on Load’s 500 Mgas/s throughput, positioned for a high-performance trading experience with 1-second finality. Every chain needs one.

- bridge.load.network: a bidirectional bridge between Load and Ethereum, powered by Hyperlane.

- USDL with Astro Labs: backed by USDC, USDT, and DAI, and explicitly designed so yield can be diverted to incentivize pools, protocols, and validators. USDL will power a growth flywheel tied to the network’s data economy.
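For a rough sense of scale for the 500 Mgas/s figure, assuming Load charges standard EVM gas costs: a plain ETH value transfer costs a fixed 21,000 gas, so if the entire gas budget went to simple transfers (an idealized upper bound, since a DEX swap on tapestry.fi costs considerably more gas than a transfer), throughput would be:

$$
\frac{500 \times 10^{6}\ \text{gas/s}}{21{,}000\ \text{gas/transfer}} \approx 23{,}810\ \text{transfers/s}
$$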

And later in the year, Load’s DeFi-for-storage angle got stronger: Nau Finance launched GiB, a stablecoin pegged to the cost of 1 GiB of storage on Arweave, minted by depositing AR as collateral, i.e., predictable storage costs expressed as a unit of storage, not fiat.
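The minting arithmetic behind a storage-denominated stablecoin can be sketched in integer units. Arweave denominates AR in winston (1 AR = 10^12 winston), and a gateway can quote the winston price of storing a given number of bytes (2^30 bytes for 1 GiB). The collateral ratio, the example price, and the `gib_mintable` function below are hypothetical assumptions for illustration, not Nau Finance's actual minting logic.

```python
WINSTON_PER_AR = 10**12  # 1 AR = 10^12 winston (Arweave's base unit)
BASIS_POINTS = 10_000    # 15_000 bps == 150% collateralization


def gib_mintable(deposit_winston: int, price_winston_per_gib: int,
                 collateral_ratio_bps: int) -> int:
    """Whole GiB tokens mintable against an AR deposit (hypothetical model).

    The deposit must cover collateral_ratio x (tokens x current price of 1 GiB).
    Integer math avoids float rounding; the result is floored to whole tokens.
    """
    if price_winston_per_gib <= 0 or collateral_ratio_bps <= 0:
        raise ValueError("price and collateral ratio must be positive")
    return (deposit_winston * BASIS_POINTS) // (
        price_winston_per_gib * collateral_ratio_bps
    )


# Hypothetical example: deposit 10 AR when 1 GiB costs 2 AR, at 150% collateral.
print(gib_mintable(10 * WINSTON_PER_AR, 2 * WINSTON_PER_AR, 15_000))  # 3
```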

High-value integrations for storage, DA, and AI

Load’s ecosystem grew substantially in 2025 through partnerships with Blobscan, Dymension, Humanode, Mask, RSS3, dHealth, Apus, and KYVE.

Our bet that Load could solve the problem of chain data expiry proved right. Load was integrated as an altDA provider for permanent blobs in Dymension’s RollApps, by Blobscan as a permanent alternative to Google storage, and by KYVE as a way for chains to cheaply dump ledger data and selectively make it permanent on Arweave via Load S3.

We found that AI use cases suited Load S3’s flexibility: Apus integrated Load S3 to store AI attestations and prompts, while dHealth added Load S3 as a way to store patient healthcare records for AI analysis.

The early evolution of Load S3

A big chunk of the year went into making S3 a first-class interface to permanence. We encapsulated a rock-solid S3 client inside a HyperBEAM device, giving the world’s most popular storage standard permanent rails, cryptographic integrity, and a suite of tools that both developers and non-technical users could use to store data on HyperBEAM.

We partnered with Turbo and moved towards a model where the S3 API can post directly using Turbo + ANS-104 DataItems, then made DataItems the default via the s3-agent REST API.

In August we spun the initial PoC out to our own bare metal services and started offering the ~s3@1.0 device at scale. We also prepared to turn it into an AO-powered network; operators can either run MinIO clusters colocated with HyperBEAM nodes or connect existing external clusters.

A permissionless auth layer

In October, Load S3 reached its first openness stage: you no longer needed to DM for access keys. Keys (load_acc) are generated self-serve via cloud.load.network, and enable access to Load S3 via the SDK, s3-agent, and the loaded-turbo upload service.

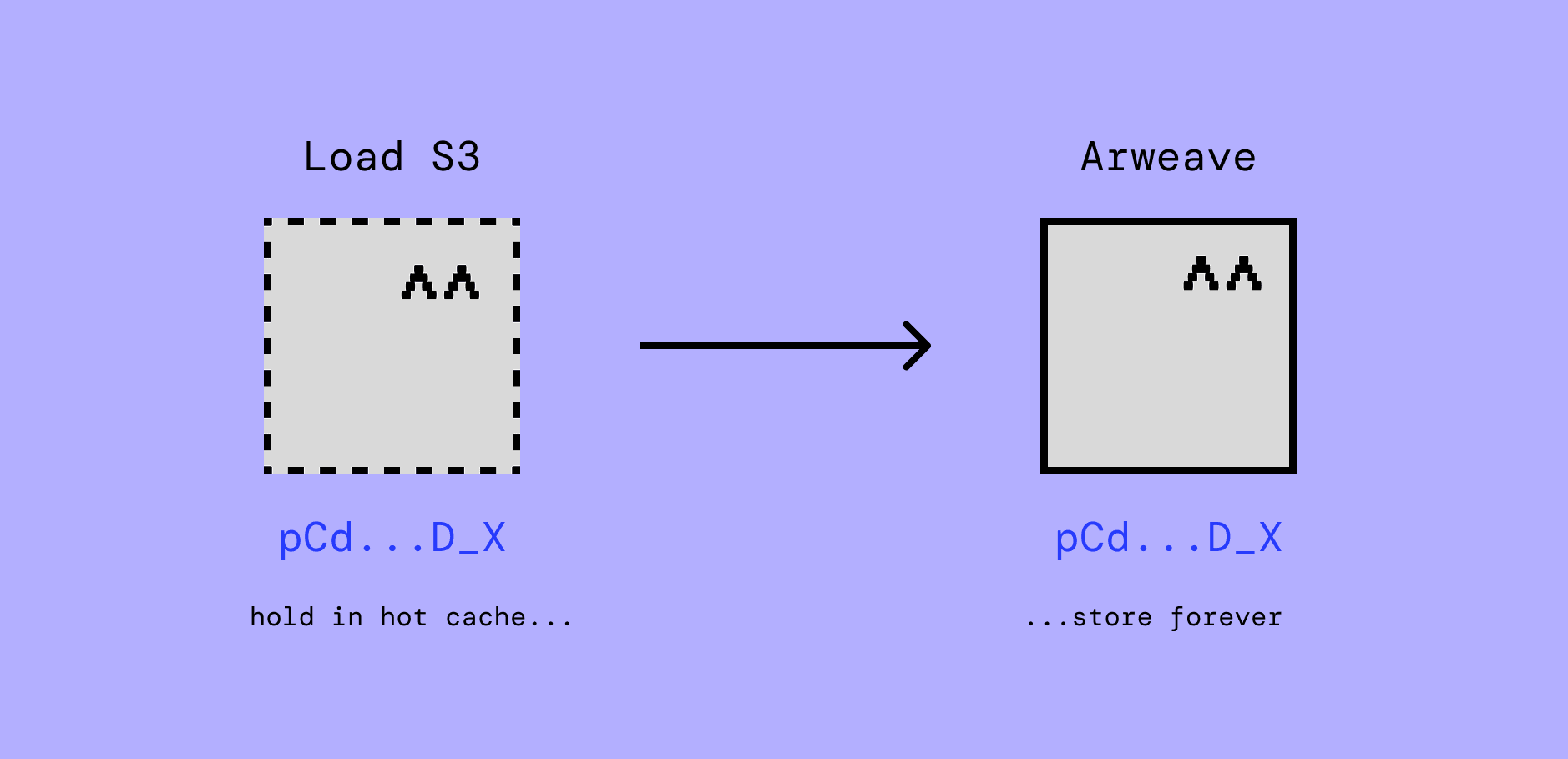

The xANS-104 paradigm

In September we coined the thing we’d been building towards: xANS-104.

Technically, xANS-104 describes an ANS-104 DataItem that is first stored temporarily on Load S3 and can then transition either to deletion or to permanent storage on Arweave, without losing determinism or provenance.

In practice, this lets applications decide whether data should be permanent without having to know at upload time. As we wrote in our data agents post, it’s not always obvious what data will become valuable, and systems shouldn’t have to change the way they find that data just because its robustness increased.

ANS-104 DataItems can be created offchain; their IDs are deterministic, so they stay the same whether the item lives on Load S3 or on Arweave; and they are inherently tamper-proof. With this model, we found the format we’ll be defaulting to.
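The lifecycle can be modeled as a small state machine. The one detail taken from the ANS-104 spec is that a DataItem's ID is the SHA-256 hash of its signature, base64url-encoded, which is why the ID is fixed at creation time and survives a move from Load S3 to Arweave. Everything else (the `XDataItem` class, the tier names, and passing placeholder signature bytes instead of actually signing) is a hypothetical simplification, not Load's code.

```python
import base64
import hashlib
from enum import Enum


class Tier(Enum):
    TEMPORARY = "load-s3"  # hot storage, still deletable
    PERMANENT = "arweave"  # promoted to permanent storage
    DELETED = "deleted"    # expired or discarded


def dataitem_id(raw_signature: bytes) -> str:
    # ANS-104: the DataItem ID is the SHA-256 of its signature, base64url-encoded.
    digest = hashlib.sha256(raw_signature).digest()
    return base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")


class XDataItem:
    """Hypothetical model of the temporary -> {deleted | permanent} lifecycle."""

    def __init__(self, raw_signature: bytes, payload: bytes):
        self.id = dataitem_id(raw_signature)  # fixed at creation, offchain
        self.payload = payload
        self.tier = Tier.TEMPORARY

    def make_permanent(self) -> str:
        self._require_temporary()
        self.tier = Tier.PERMANENT
        return self.id  # same ID before and after promotion

    def delete(self) -> None:
        self._require_temporary()
        self.tier = Tier.DELETED
        self.payload = b""

    def _require_temporary(self) -> None:
        # Both transitions are one-way and only start from temporary storage.
        if self.tier is not Tier.TEMPORARY:
            raise ValueError(f"item {self.id} is already {self.tier.value}")
```

Because the ID depends only on the signature, anything that indexed the item while it sat on Load S3 can keep using the same reference after promotion.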

x402 + 402104

In October we shipped monetization for uploaders at the S3 object level: paywalls for ANS-104 DataItems. We wanted anyone to be able to upload data to Load S3 and charge tokens for it, so we added the feature to the Load Cloud UI and built a backend API for it. That API powered 402104, a way for recipients to pay USDC to unlock a given DataItem.
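The request flow behind a paywalled DataItem follows the HTTP 402 (Payment Required) pattern that x402 builds on: without a valid payment the server answers 402 along with what it wants to be paid; with one, it serves the bytes. Below is a framework-free Python sketch; the `Paywall` fields, the shape of the 402 body, and the `verify`/`fetch` callbacks are hypothetical and do not reproduce the 402104 API or the x402 wire format.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Tuple, Union

USDC_DECIMALS = 6  # USDC uses 6 decimals: 1 USDC == 1_000_000 base units


@dataclass(frozen=True)
class Paywall:
    dataitem_id: str
    price: int   # price in USDC base units
    pay_to: str  # uploader's receiving address (hypothetical field)


Response = Tuple[int, Union[dict, bytes]]


def serve(
    wall: Paywall,
    payment_proof: Optional[str],
    verify: Callable[[str, Paywall], bool],
    fetch: Callable[[str], bytes],
) -> Response:
    """Return (status, body): 402 with payment terms, or 200 with the DataItem."""
    terms = {
        "dataitem": wall.dataitem_id,
        "asset": "USDC",
        "amount": wall.price,
        "payTo": wall.pay_to,
    }
    if payment_proof is None:
        return 402, terms  # first request: tell the client what to pay
    if not verify(payment_proof, wall):
        return 402, {**terms, "error": "payment not valid"}
    return 200, fetch(wall.dataitem_id)  # paid: release the content


# Example: a DataItem priced at 0.25 USDC (all values are placeholders).
wall = Paywall("example-dataitem-id", price=25 * 10 ** (USDC_DECIMALS - 2),
               pay_to="0xUploader")
```

In the x402 model, checking and settling the payment (the `verify` step here) is the job of a facilitator, which is the role the ~x402@1.0 HyperBEAM device fills.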

Then we took it further with the ~x402@1.0 HyperBEAM facilitator and hyper-x402, adding $AO token support (breaking free of the Coinbase ecosystem!).

A quest to HyperBEAM the EVM (and alien compute)

Given Load’s final form as a hybrid AO-EVM contraption, we’re building the EVM’s component stack into modular devices on HyperBEAM. This includes the Helios light client, EVM runtimes, and even RISC-V. We’re building towards a vision of Load mainnet where either side of the network (EVM or AO) can be the trigger or the action for compute.

We also published a post (Load is the Home Planet for Alien Compute) that tied together the strange codebases (RISC-V EVMs, GPU compute layers, HyperBEAM runtimes) and positioned Load as the base layer for execution environments that don’t fit traditional onchain constraints.

Alphanet -> Fibernet

After several less-than-stable testnet iterations, Alphanet V5 landed as a production-grade testnet that dApps can safely deploy to. V5 launched in May and has remained a solid foundation for dApps like Tapestry and Relic, and it is used in production by chains like Metis and Dymension.

From deliberately stable to deliberately unstable (and back again), we announced Fibernet in November as a new testnet built to validate improvements that aren’t possible to test safely on Alphanet. After testing, the network will form the 1:1 basis for Load’s mainnet release.

Fibernet is experimental by design. It will change rapidly, fork, and undergo aggressive stress tests. It’s the performance lab for Load: the environment where we push the system to its limits before introducing new capabilities to the stable network.

Alphanet V5 will continue to operate for dApp development, ledger archiving and altDA. Fibernet runs alongside it with a different purpose: to validate whether Load’s architecture scales the way it needs to.

And it ties access to the fair launch economics: Fibernet will be initially access-gated to Load Fair Launch participants.

Towards 2026

Coming into 2025, we were keen to shake the misconception that Load was just another EVM chain, and we wanted to show how permanence + unbounded compute could turn it into a next-generation onchain data center.

We focused on making onchain storage a smart default (not just a pain in the arse) and on unlocking powerful new compute possibilities via HyperBEAM.

Just as vital, we laid the foundation for mainnet so that 2026 can take what’s experimental and make it stable.

LFG UNLEASH FIBERNET (`・ω・´)

LFG $LOAD UNLOCK ヽ(´▽`)ノ

LFG YET MORE HYPERBEAM (ノ◕ヮ◕)ノ*:・゚✧