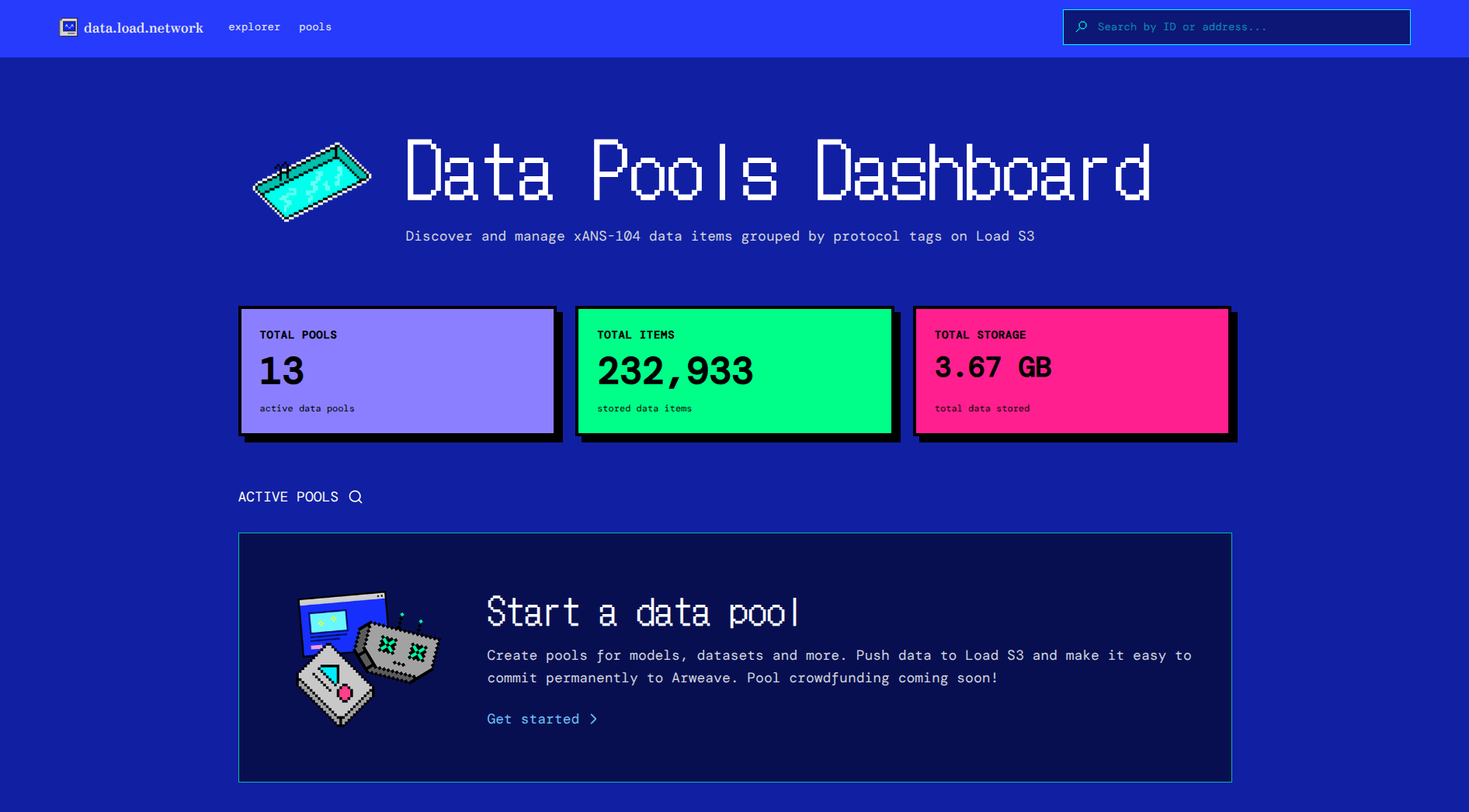

When we launched the Load S3 explorer last week, you might have noticed a new feature: Pools.

Pools is a way to store complete datasets on Load S3 so that they are easy to query and can be pushed to Arweave collaboratively.

The emergence of AI has triggered an explosion in the amount of data online. Hugging Face alone hosts over 700,000 datasets with a total size of well over 12PB by today’s estimates.

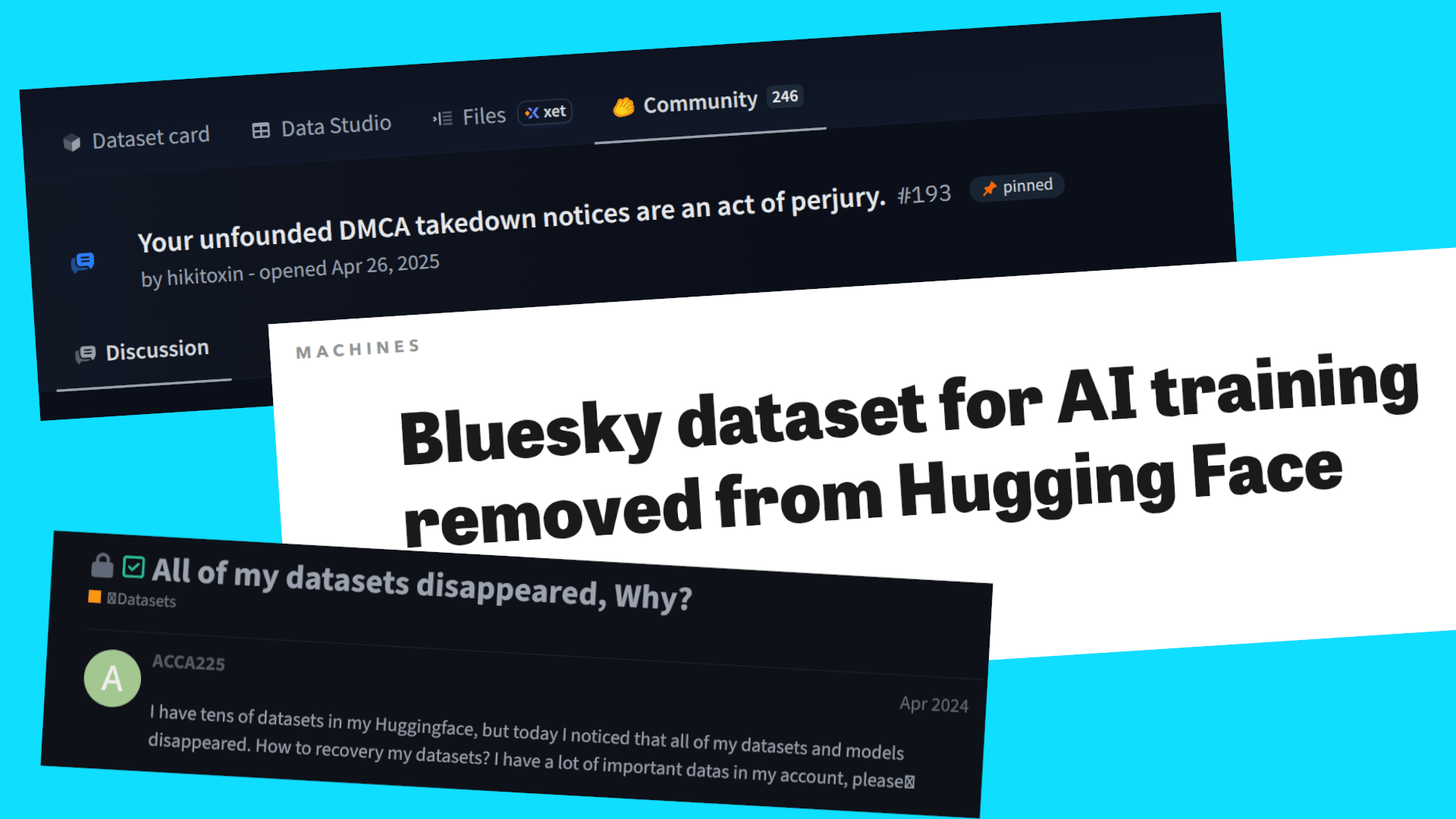

It would make sense for such vital and widely used data to be immutable and permanent, but it’s not quite the case.

Despite their importance, most AI datasets today are neither immutable nor permanent. Platforms like Hugging Face operate as mutable Git repositories: dataset owners can overwrite history, remove files, or delete entire sets, while HF must comply with takedowns, policy changes, and legal pressure. This makes modern AI research fragile, especially for reproducible experiments.

The same goes for GitHub, which is just as much of a wild west. Despite version control and revision history, repositories can be made private or deleted by either Microsoft or their owners.

If the data is vital (and it is), it needs proper archival guarantees: verifiable integrity, permanent storage, and cryptographic versioning.

So just dump all of the datasets on Arweave? Well, yes and no. The ultimate goal for AI dataset archivism is permanent, versioned, immutable storage with provenance, but this comes with high upfront costs and UX hurdles. Load Pools, s3-agent, and the xANS-104 paradigm are here to fix that. Let’s see how.

A CLI for uploading tagged datasets to Load S3

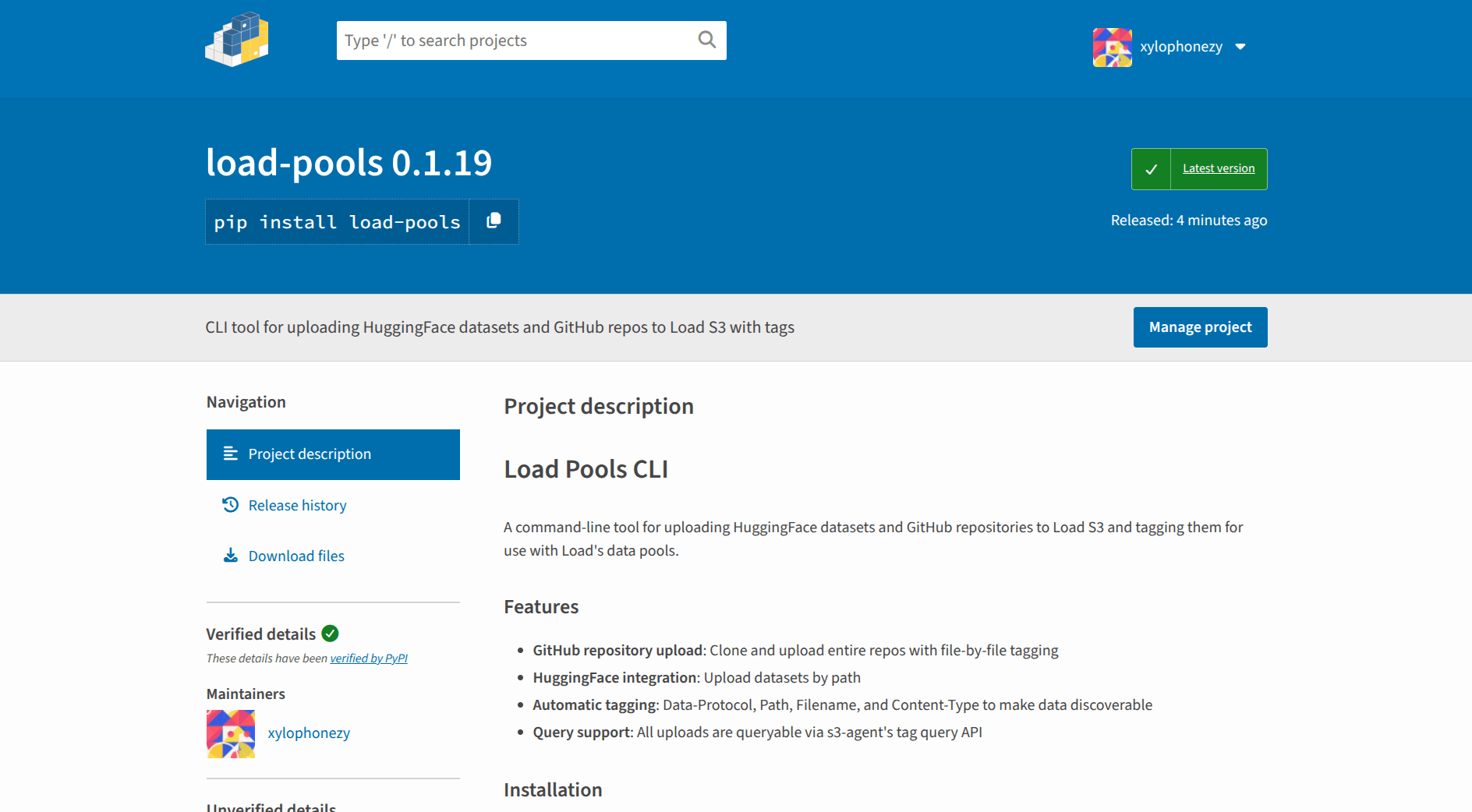

Today we’re shipping load-pools, a Python-based CLI package for pushing entire datasets to Load S3 with support for Hugging Face and GitHub.

After installation:

pip install load-pools

…it’s as easy as:

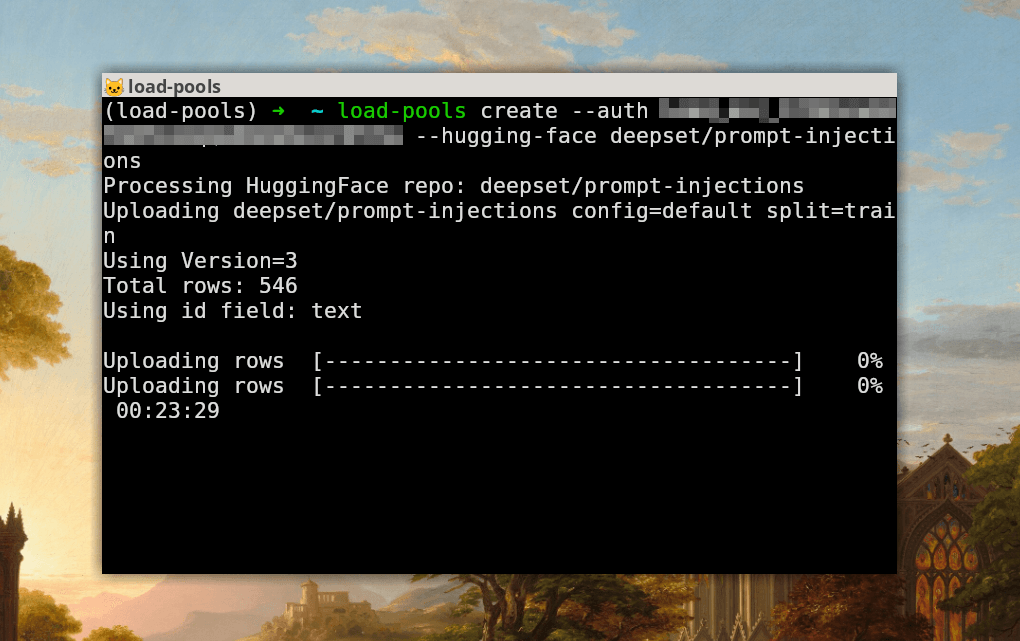

load-pools create --auth <LOAD_ACC_KEY> --hugging-face <DATASET>

You can get a load_acc key at cloud.load.network, and the Hugging Face dataset argument should be, e.g., openai/gdpval, discoverable in the URL of any dataset on the site.
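If you start from a dataset page URL rather than the bare ID, the argument can be pulled out of the path. The sketch below is only an illustration: `dataset_id_from_url` and `build_create_command` are hypothetical helper names, and it assumes the usual `/datasets/<owner>/<name>` URL layout. The flags are the ones from the command above.

```python
from urllib.parse import urlparse


def dataset_id_from_url(url: str) -> str:
    """Return the '<owner>/<name>' part of a Hugging Face dataset URL.

    Assumes the /datasets/<owner>/<name> layout; datasets without an
    owner segment are not handled by this sketch.
    """
    parts = [p for p in urlparse(url).path.split("/") if p]
    if len(parts) < 3 or parts[0] != "datasets":
        raise ValueError(f"not a Hugging Face dataset URL: {url}")
    return f"{parts[1]}/{parts[2]}"


def build_create_command(auth_key: str, dataset_id: str) -> list[str]:
    """Assemble the load-pools invocation shown above as an argv list."""
    return ["load-pools", "create", "--auth", auth_key, "--hugging-face", dataset_id]


if __name__ == "__main__":
    url = "https://huggingface.co/datasets/openai/gdpval"
    # Print the command instead of running it, so nothing is uploaded here.
    print(" ".join(build_create_command("<LOAD_ACC_KEY>", dataset_id_from_url(url))))
```

The resulting list can be handed to `subprocess.run` once a real key is in place.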

GitHub repositories are uploaded in a similar way, with: --github <REPO_URL>. Check PyPI for the full docs.

Running this pushes the entire contents of a dataset to Load S3, effectively creating a mirror on Load’s HyperBEAM-powered storage layer.

Where s3-agent and xANS-104 come in

But not just any old mirror. Flowing through the s3-agent, each piece of the dataset is packaged as an ANS-104 dataitem.

ANS-104 guarantees:

- Provable signer

- Content integrity

- Deterministic ID

- Tags built into the data itself

- Immutability

- 1:1 Arweave compatibility
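To make the deterministic ID point concrete: as we understand the ANS-104 spec, a DataItem's ID is the SHA-256 digest of its signature, written in unpadded base64url like other Arweave IDs. The sketch below shows only that last step. The function name `data_item_id` is ours, and the signature bytes are a dummy placeholder, not a real signature.

```python
import base64
import hashlib


def data_item_id(signature: bytes) -> str:
    """Derive a DataItem ID from its signature bytes.

    Sketch of the ANS-104 rule as we read it: id = SHA-256(signature),
    encoded as base64url without '=' padding.
    """
    digest = hashlib.sha256(signature).digest()
    return base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")


if __name__ == "__main__":
    # Dummy 512-byte value standing in for a real signature.
    fake_signature = bytes(512)
    print(data_item_id(fake_signature))
```

Because the ID depends only on the signature, the same signed item resolves to the same ID wherever it is stored.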

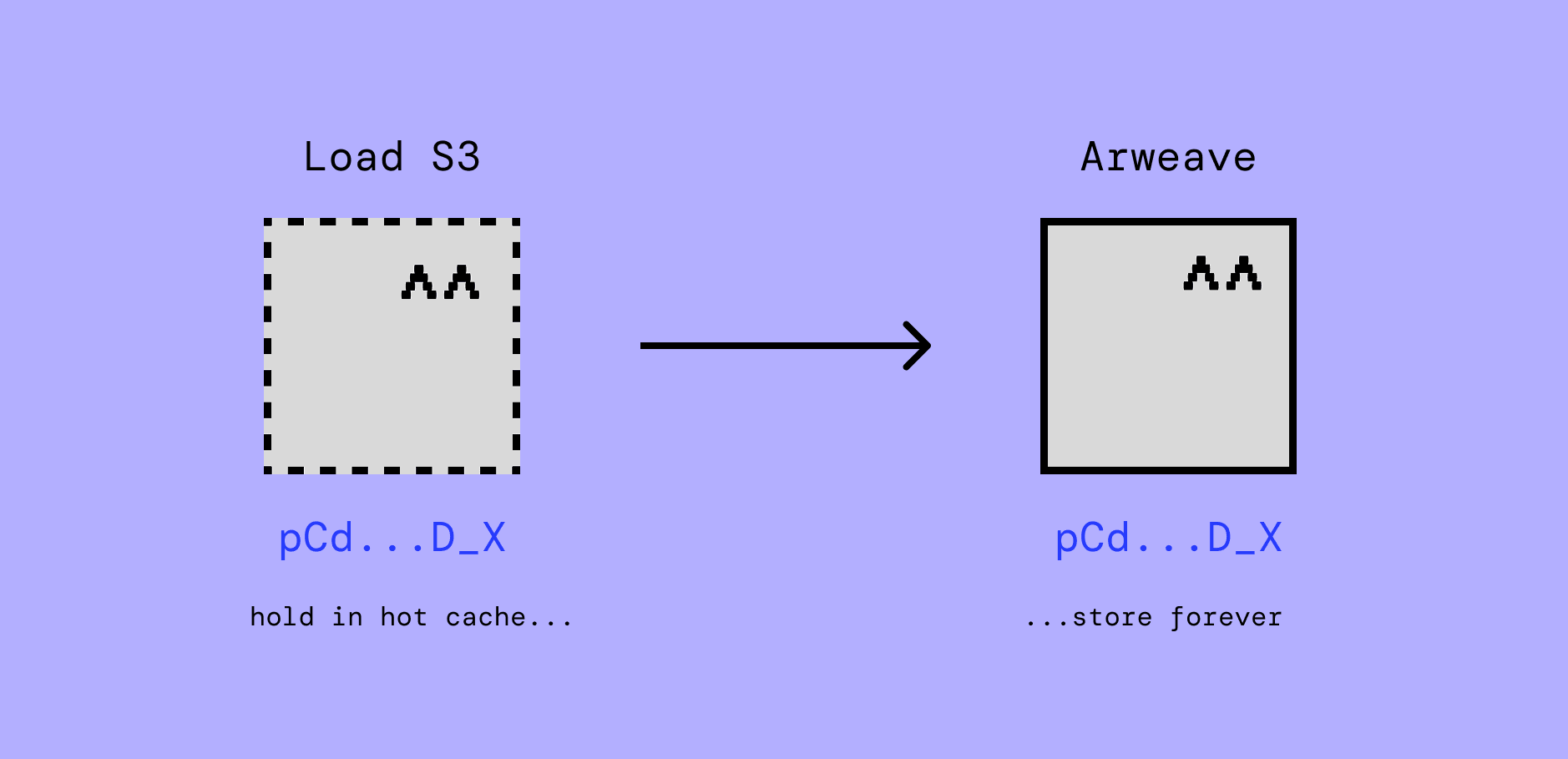

DataItems on Load S3 can be anchored to Arweave as-is by anyone, without losing provenance or changing ID. This is what we call the xANS-104 paradigm:

Uploading data to Load S3 opens the option of gradually committing bits and pieces of the set over time. This is especially helpful for huge datasets that would cost thousands of dollars to make permanent upfront, and even enables crowdfunding.

Crowdfunded permanence

One of the earliest use cases I saw for Arweave was as an immutable snapshot of the whole of Wikipedia. At the time, Arweave’s spin focused on storing all of humanity’s knowledge, and that appealed to me as an archivist. The reason we don’t have something like the entirety of Wikipedia on Arweave is that the cost is too high to front for any one person.

For AI datasets and even something like Wikipedia, Load Pools solves the cold start problem by making it possible to contribute $AR towards making data permanent — transitioning it from temporary Load S3 to forever Arweave.

Crowdfunding is the last missing piece of Load Pools, and next in line to build. For now, you can store any Hugging Face or GitHub data on Load S3 in a format that prepares it for permanence.

Get started with load-pools:

- pip install load-pools

- Upload your dataset (docs)

- When the upload finishes, check data.load.network/pools to see it on the explorer

If you need the data programmatically, use the s3-agent /tags/query endpoint, like this:
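From Python, for instance, a request of that shape can be built with the standard library. This is a minimal sketch, not an official client: the URL and filter body are copied from the curl command in this post, while the function names and the assumption that the response body is JSON are ours.

```python
import json
import urllib.request

QUERY_URL = "https://load-s3-agent.load.network/tags/query"


def build_query_request(key: str, value: str) -> urllib.request.Request:
    """Build a POST request filtering on a single tag key/value pair."""
    body = json.dumps({"filters": [{"key": key, "value": value}]}).encode("utf-8")
    return urllib.request.Request(
        QUERY_URL,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def query_by_tag(key: str, value: str, timeout: float = 30.0):
    """Send the query and decode the response (assumed to be JSON)."""
    with urllib.request.urlopen(build_query_request(key, value), timeout=timeout) as resp:
        return json.load(resp)


if __name__ == "__main__":
    print(query_by_tag("Data-Protocol", "cvjena/labelmefacade"))
```

The same request as a curl command: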

curl -X POST https://load-s3-agent.load.network/tags/query \
  -H "Content-Type: application/json" \
  -d '{"filters": [{"key":"Data-Protocol","value":"cvjena/labelmefacade"}]}'

Stay tuned on X for updates to the project!