Typical S3 storage layers are a black box: hard to audit, lacking cryptographic provenance, and requiring a high degree of trust. With Load S3, we’re changing that. Without being a blockchain, Load S3 now has the properties of one.

Just before the new year, we shipped a feature that lets you upload an S3 object alongside an onchain pointer on Arweave, which records its timestamp. Combine this with the signed DataItem format, which proves content integrity and the uploader’s address, and users now have a complete audit trail for the lifecycle of their S3 objects: permanent, tamper-proof, verifiable receipts.

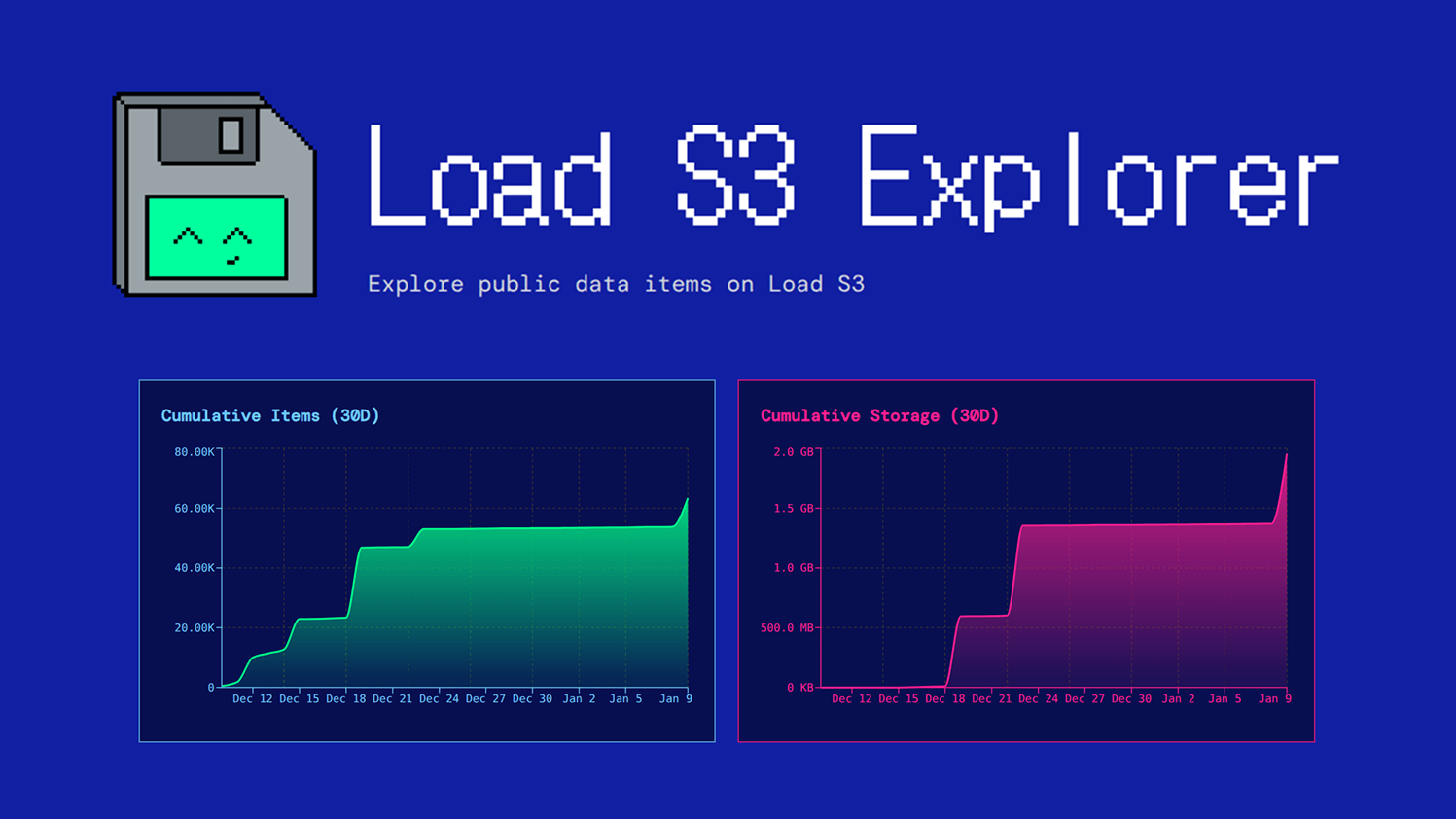

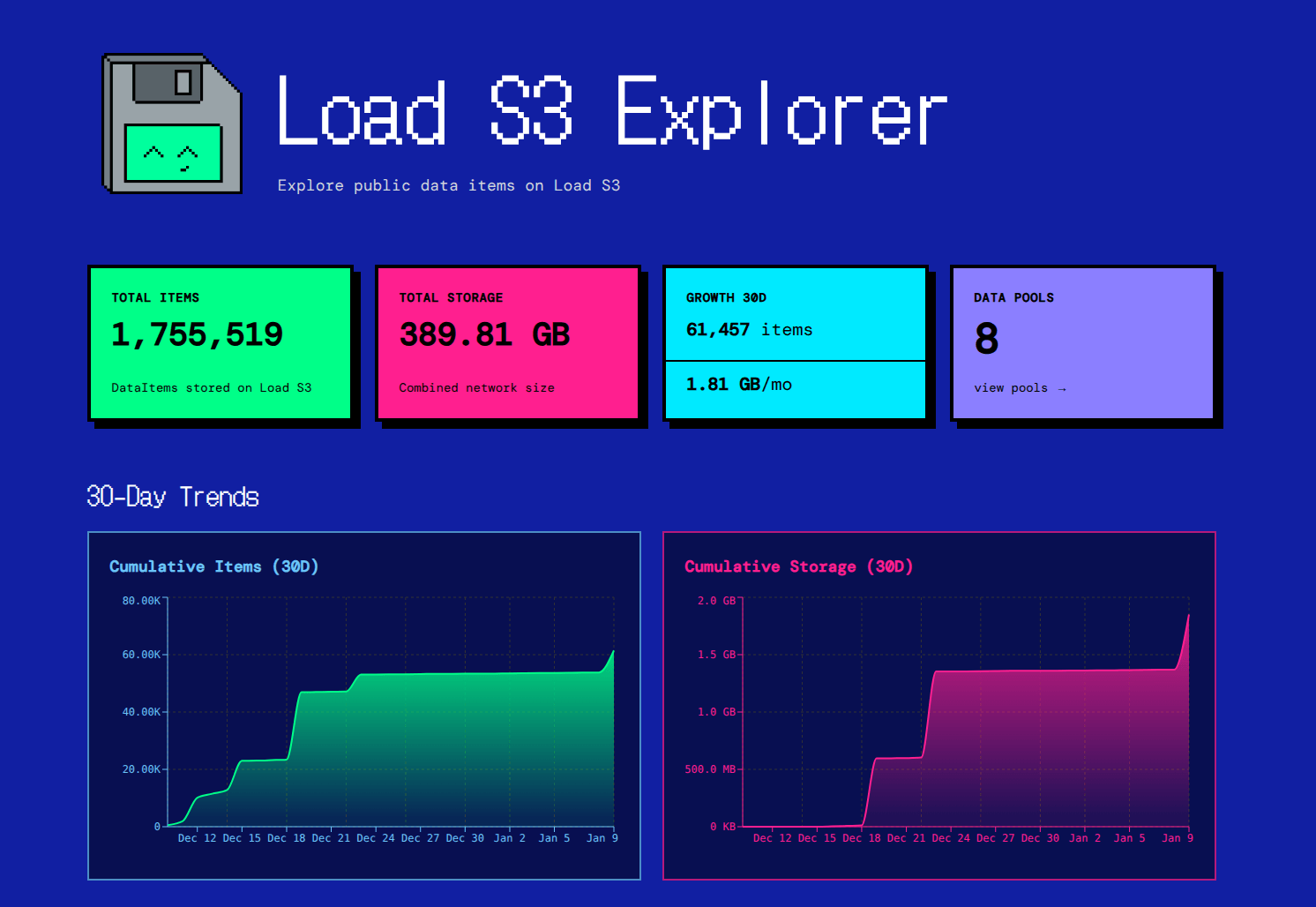

With a full suite of metadata for public offchain DataItems, it makes sense to make them publicly discoverable. We’ve built a public DataItem explorer and a new pools feature that uses tags to classify data into datasets.

In this post, we’ll go through the features of each.

data.load.network: an explorer for public DataItems

The s3-agent API endpoint has become the de facto way to upload publicly accessible DataItems to Load S3:

```bash
echo -n "hello world" | curl -X POST https://load-s3-agent.load.network/upload \
  -H "Authorization: Bearer $load_acc_api_key" \
  -F "file=@-;type=text/plain" \
  -F "content_type=text/plain"
```

(P.S. Get a load_acc key from cloud.load.network.)
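The command above reads the payload from stdin. To upload a file from disk instead, a small wrapper along these lines might help. It is a minimal sketch that reuses only the two form fields shown above (file and content_type); load_upload and guess_content_type, including its extension-to-MIME mapping, are hypothetical helpers, not part of s3-agent.

```bash
# Hypothetical helpers: upload a file from disk to s3-agent's /upload endpoint
# using only the documented form fields (file, content_type).
LOAD_UPLOAD_URL="https://load-s3-agent.load.network/upload"

# Guess a MIME type from the file extension (hypothetical, deliberately small).
guess_content_type() {
  case "${1##*.}" in
    txt)  echo "text/plain" ;;
    json) echo "application/json" ;;
    csv)  echo "text/csv" ;;
    png)  echo "image/png" ;;
    *)    echo "application/octet-stream" ;;  # unknown or no extension
  esac
}

# Upload one file; fails early if $load_acc_api_key is not set.
load_upload() {
  local path ctype
  path="$1"
  if [ -z "${load_acc_api_key:-}" ]; then
    echo "load_acc_api_key is not set" >&2
    return 1
  fi
  ctype="$(guess_content_type "$path")"
  curl -X POST "$LOAD_UPLOAD_URL" \
    -H "Authorization: Bearer $load_acc_api_key" \
    -F "file=@${path};type=${ctype}" \
    -F "content_type=${ctype}"
}
```

Usage: `load_upload ./notes.txt`. Checking the key before calling curl avoids sending an empty Bearer token.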

Uploading data this way makes it public on Load S3, anchorable on Arweave, and discoverable on the explorer.

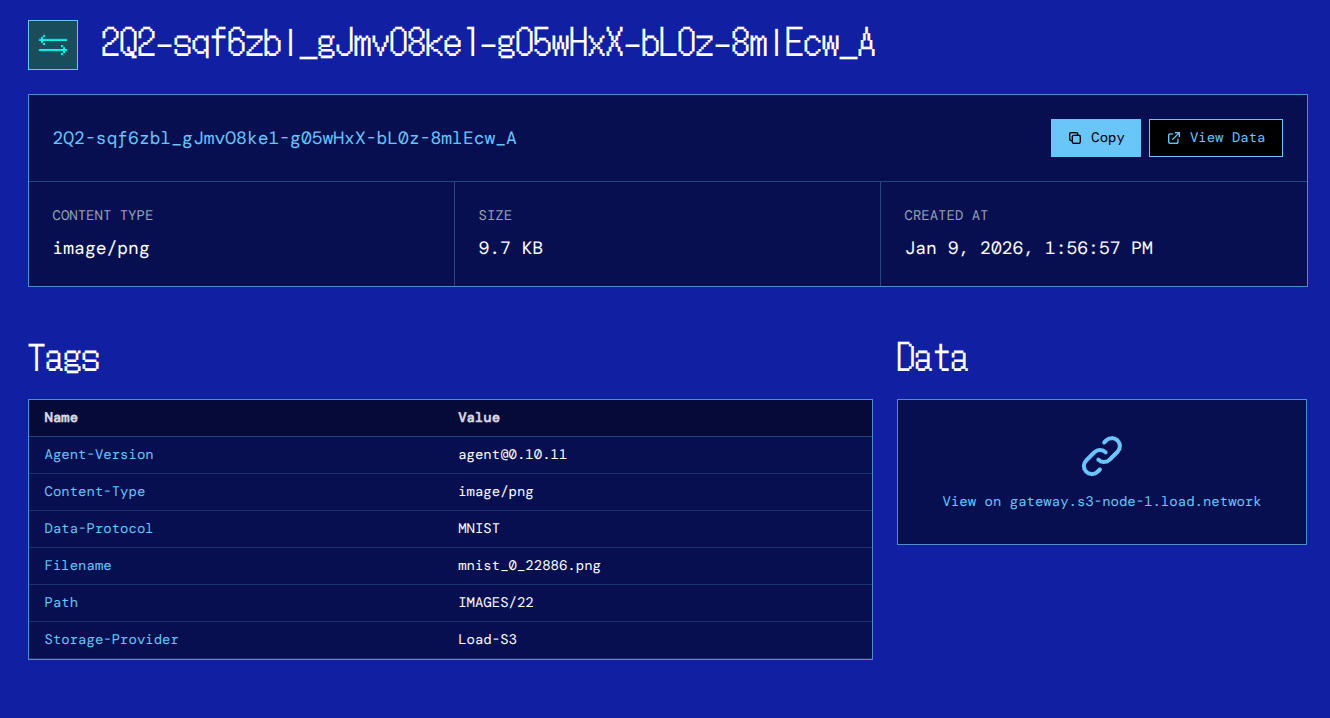

Tags, metadata and a link to the data on the gateway are displayed on the /tx/<id> page.
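If your own tooling needs to link to these pages, the URL can be derived from the ID. This sketch assumes the /tx/<id> page is served at https://data.load.network/tx/<id>, and checks that the ID is a 43-character unpadded base64url string, which is how ANS-104 encodes a DataItem ID (a 32-byte SHA-256 digest). explorer_url and is_dataitem_id are hypothetical helpers.

```bash
# Hypothetical helpers; assumes the explorer serves /tx/<id> at this base URL.
EXPLORER_BASE="https://data.load.network"

# True if $1 looks like a DataItem ID: exactly 43 base64url characters.
is_dataitem_id() {
  local id="$1"
  [ "${#id}" -eq 43 ] || return 1
  case "$id" in
    *[!A-Za-z0-9_-]*) return 1 ;;  # contains a non-base64url character
  esac
  return 0
}

# Print the explorer link for an ID, or fail if the ID is malformed.
explorer_url() {
  if ! is_dataitem_id "$1"; then
    echo "not a 43-character base64url ID: $1" >&2
    return 1
  fi
  printf '%s/tx/%s\n' "$EXPLORER_BASE" "$1"
}
```

Validating the shape first means a typo fails loudly instead of producing a dead link.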

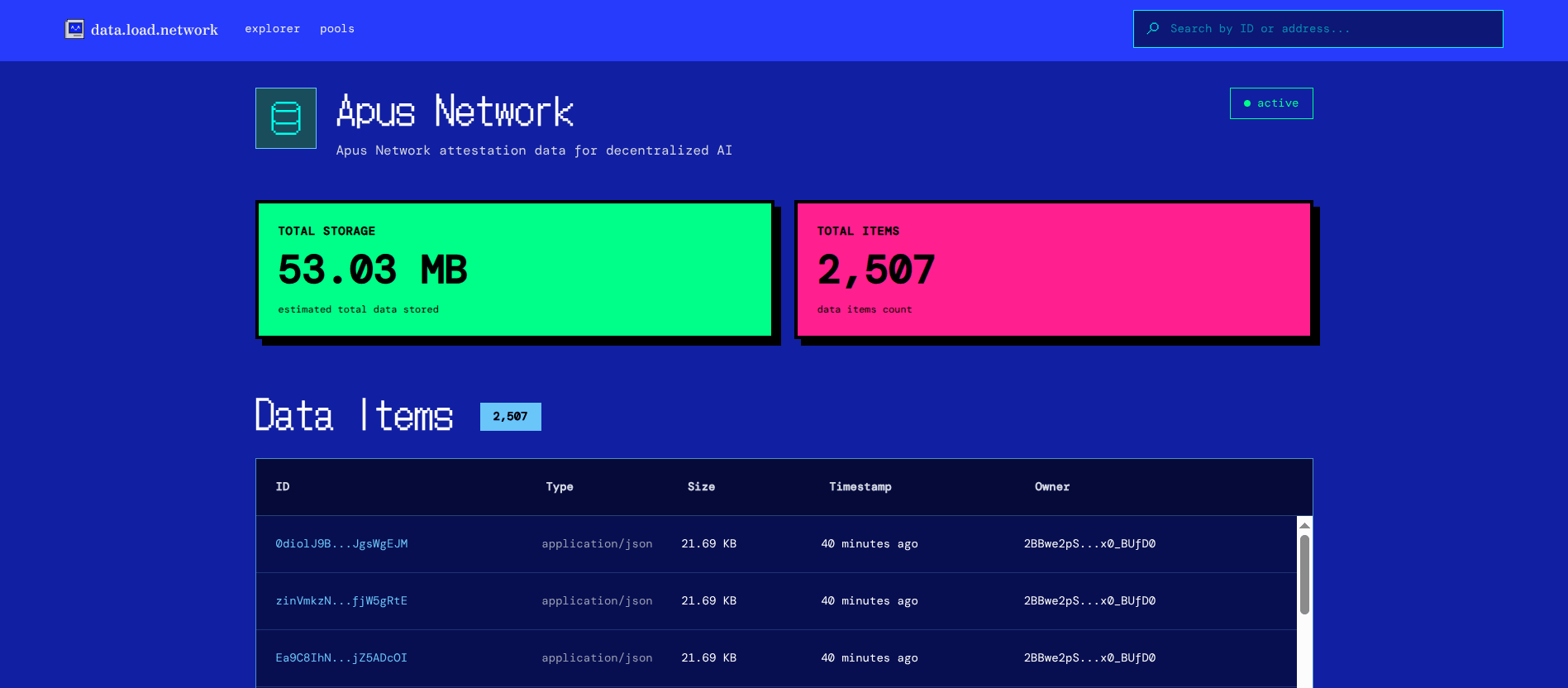

For users like Apus, who use Load S3 to store AI inference and prompt data, this provides an auditable proof of storage with a direct route to Arweave permanence (the DataItem keeps exactly the same ID regardless of storage layer).

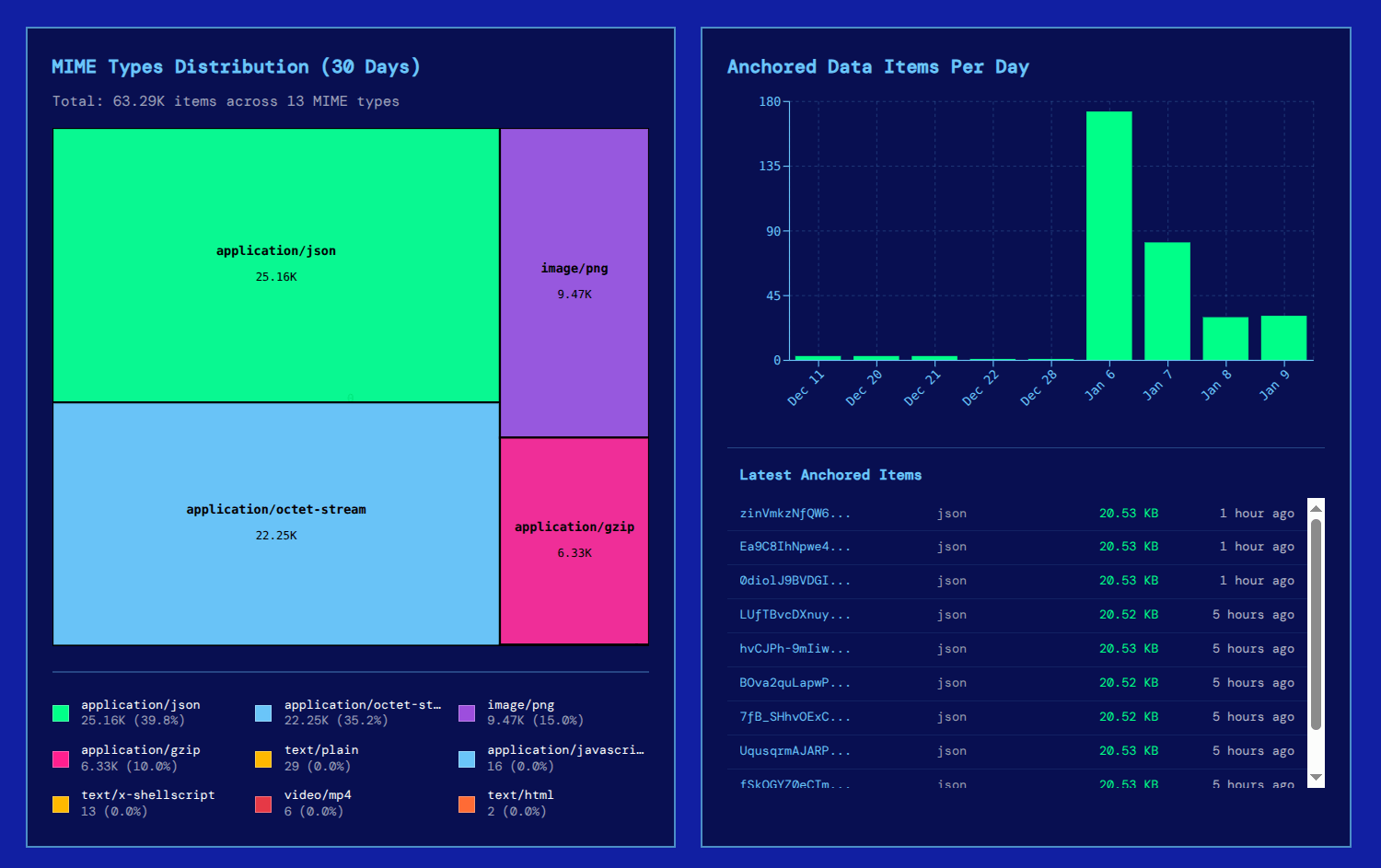

The explorer homepage shows how many DataItems flow from Load S3 to Arweave each day, alongside a feed of the most recent ones.

In line with xANS-104, we’re thrilled to see Load S3 expanding the blockweave and providing a temporary home for compatible staged data!

How queries work

The explorer is fed by a simple GraphQL-powered query layer packaged as part of the s3-agent API.

```bash
curl -X POST https://load-s3-agent.load.network/tags/query \
  -H "Content-Type: application/json" \
  -d '{
    "filters": [
      {
        "key": "Content-Type",
        "value": "text/plain"
      }
    ],
    "owner": "2BBwe2pSXn_Tp-q_mHry0Obp88dc7L-eDIWx0_BUfD0",
    "full_metadata": true
  }'
```

This example query filters on a Content-Type tag and an owner address, and shows how you can build your own tooling around Load S3’s public DataItems. Refer to the s3-agent docs for more on /tags/query.
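To reuse that request from scripts, the body can be generated rather than hand-written. In this sketch, build_tag_query and query_tags are hypothetical helpers; sending a query without "owner" is an assumption about the API, and values are interpolated with printf and no JSON escaping, so they must not contain quotes or backslashes (jq is the safer choice for arbitrary input).

```bash
# Hypothetical helpers mirroring the /tags/query request above.
QUERY_URL="https://load-s3-agent.load.network/tags/query"

# Build the request body for one tag filter and an optional owner address.
# Values are inserted verbatim: no quotes or backslashes allowed.
build_tag_query() {
  local key="$1" value="$2" owner="${3:-}"
  if [ -n "$owner" ]; then
    printf '{"filters":[{"key":"%s","value":"%s"}],"owner":"%s","full_metadata":true}\n' \
      "$key" "$value" "$owner"
  else
    # Assumption: the API accepts a query without "owner".
    printf '{"filters":[{"key":"%s","value":"%s"}],"full_metadata":true}\n' \
      "$key" "$value"
  fi
}

# Build the body and POST it; curl reads it from stdin via "@-".
query_tags() {
  build_tag_query "$@" | curl -s -X POST "$QUERY_URL" \
    -H "Content-Type: application/json" \
    --data-binary @-
}
```

Usage: `query_tags Content-Type text/plain 2BBwe2pSXn_Tp-q_mHry0Obp88dc7L-eDIWx0_BUfD0` sends the same request as the example above and prints the raw JSON response.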

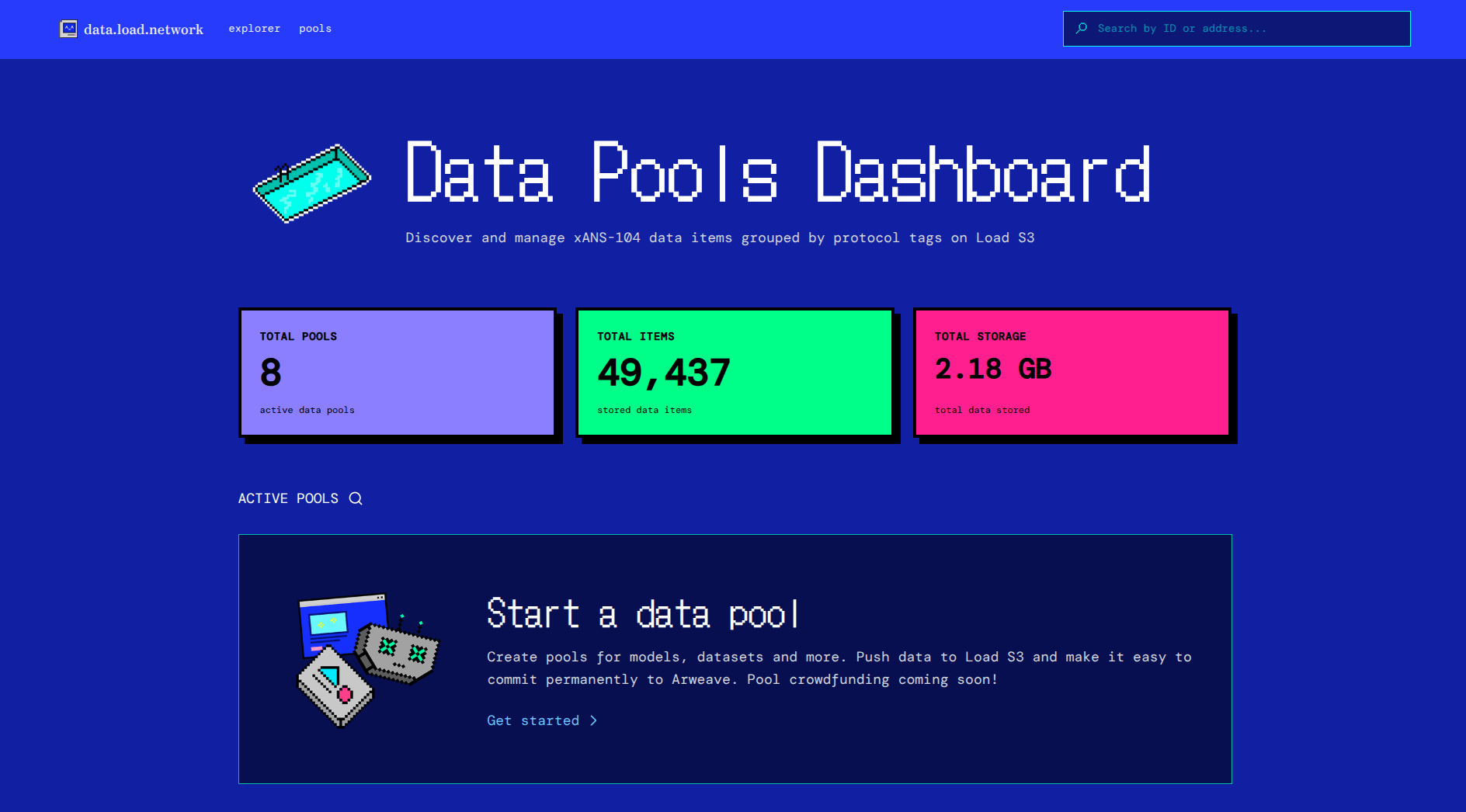

Data Pools

Since s3-agent supports tags natively (by way of the DataItem standard), you can upload full datasets and classify them into pools.

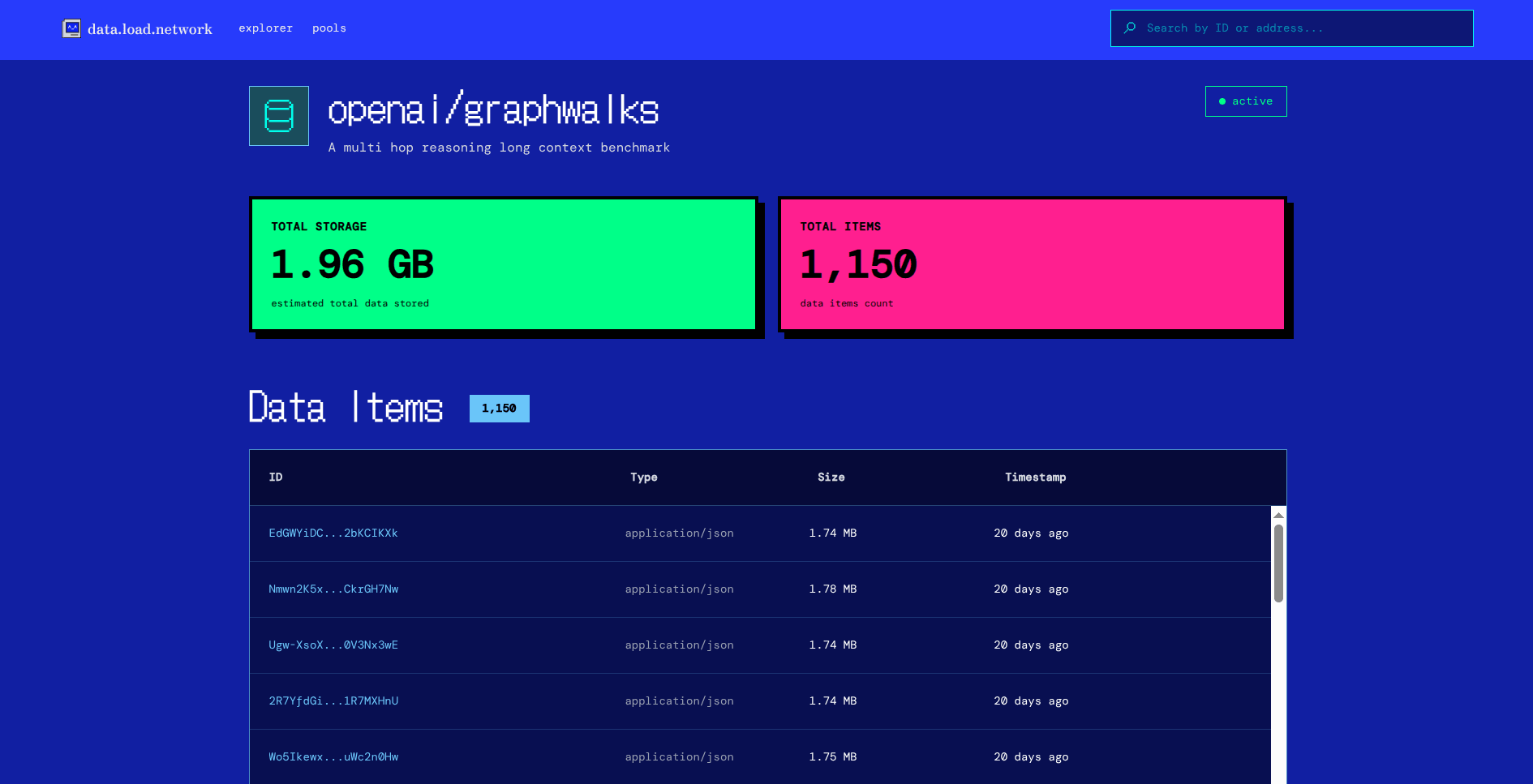

Set a unique tag, such as Data-Protocol: openai/graphwalks, and the pools dashboard can index the tagged DataItems and display them as a dataset.
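Finding a pool’s members programmatically should be possible with the same /tags/query request shape, filtering on the pool’s tag. The sketch below takes any number of KEY=VALUE filters; it assumes that multiple filters are combined with AND and that "owner" can be omitted, neither of which is confirmed here. pool_query is a hypothetical helper, and like any printf-built JSON it does no escaping.

```bash
# Hypothetical helper: build a /tags/query body from KEY=VALUE tag filters.
# Assumes filters are ANDed and "owner" is optional; does no JSON escaping.
pool_query() {
  local filters="" pair key value entry
  if [ "$#" -eq 0 ]; then
    echo "usage: pool_query KEY=VALUE [KEY=VALUE ...]" >&2
    return 1
  fi
  for pair in "$@"; do
    case "$pair" in
      *=*) ;;
      *) echo "expected KEY=VALUE, got: $pair" >&2; return 1 ;;
    esac
    key="${pair%%=*}"    # text before the first '='
    value="${pair#*=}"   # text after the first '='
    entry=$(printf '{"key":"%s","value":"%s"}' "$key" "$value")
    filters="${filters:+$filters,}$entry"   # comma-separate after the first
  done
  printf '{"filters":[%s],"full_metadata":true}\n' "$filters"
}
```

Usage: `pool_query Data-Protocol=openai/graphwalks | curl -s -X POST https://load-s3-agent.load.network/tags/query -H "Content-Type: application/json" --data-binary @-`. Splitting on the first "=" keeps values such as "a=b" intact.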

Where this gets really cool is Load’s links to Arweave and the xANS-104 paradigm. Soon it will be possible to sponsor the upload of staged temporary S3 DataItems to Arweave, pushing all or part of a dataset while retaining its ID and provenance.

We see this as an extremely useful way to crowdfund the permanence of AI datasets, large media libraries, or even resources like Wikipedia: once the data is on S3, it can be anchored to Arweave in one command.