Load Cloud API keys are now fully compatible with the S3 SDK and can be generated self-serve via cloud.load.network

Today we are pleased to announce that Load S3, the HyperBEAM-powered temporary storage layer, has reached its first openness stage: you no longer need to privately request access keys to interact with the storage layer through S3-compatible SDKs and tools. Let’s dive straight into the new release!

load_acc powered LS3

As of today, you no longer need to contact us directly to get Load S3 access keys for LS3’s drive.load.network HyperBEAM cluster. The Load S3 storage layer is now programmatically accessible with load_acc access keys through a new cluster endpoint, https://api.load.network/s3. Let’s walk through it step by step!

How to get load_acc API keys

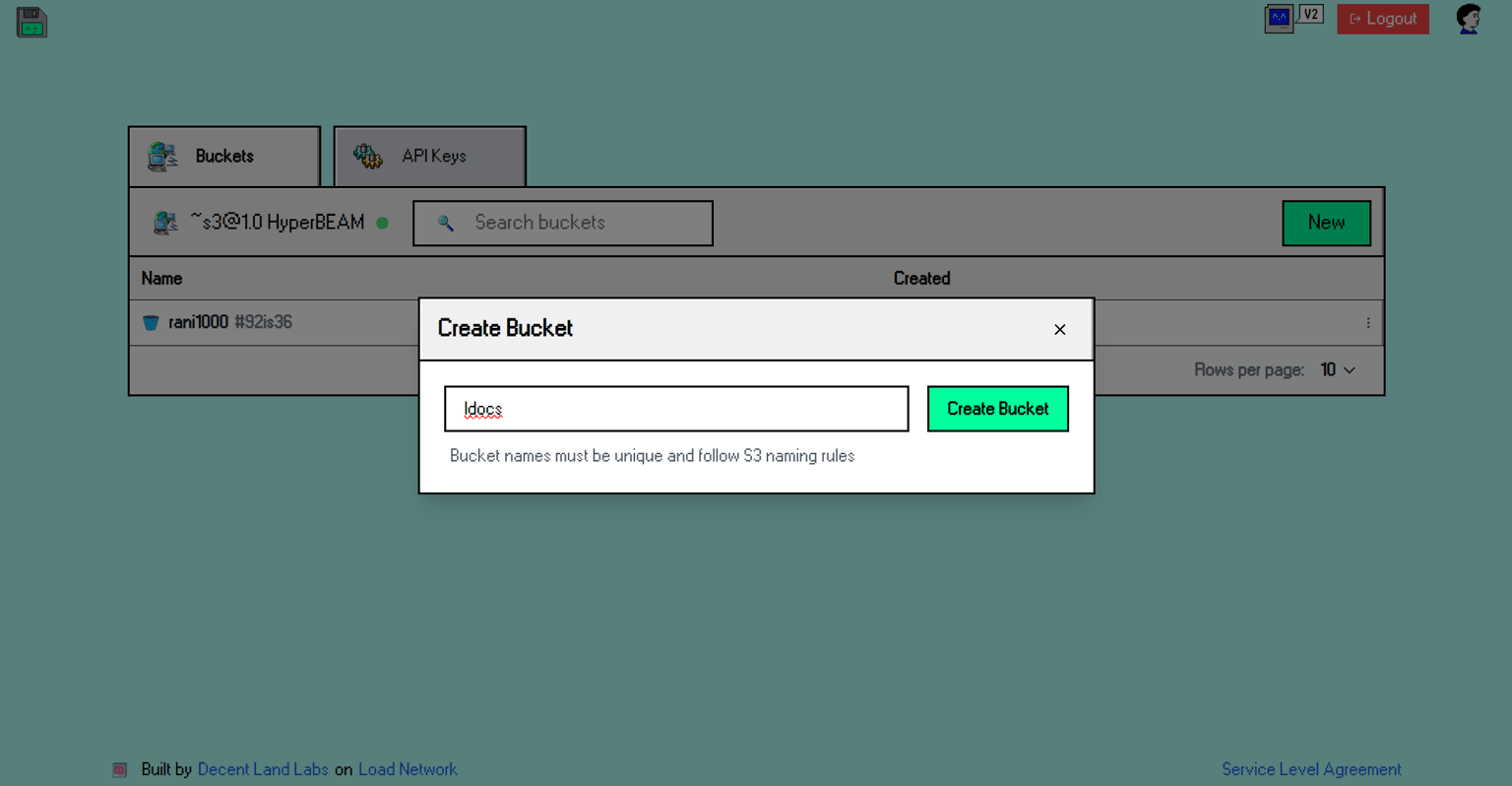

First, you have to create a bucket from the cloud.load.network dashboard and copy the full name it generates.

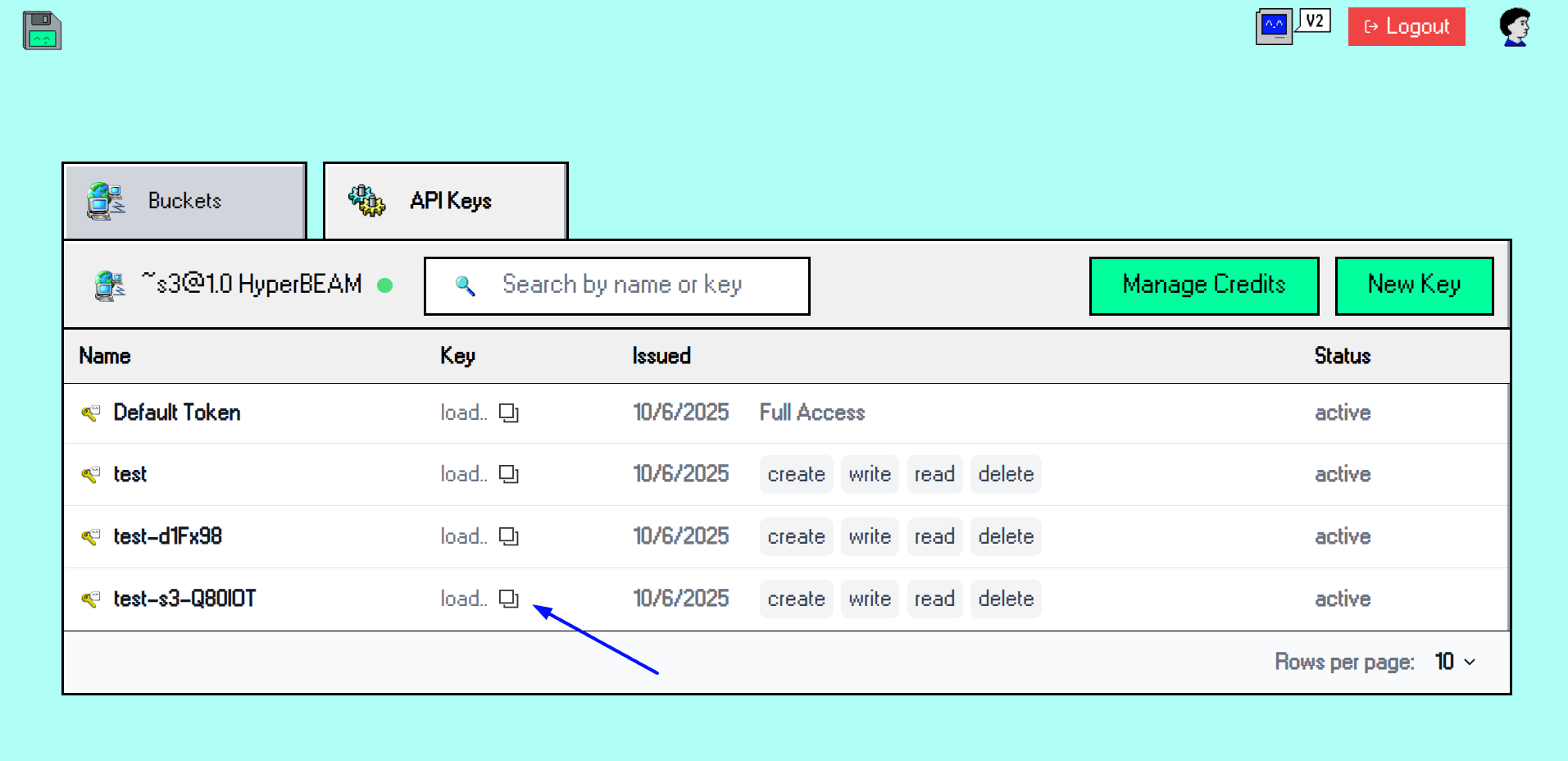

After creating the bucket, navigate to the “API Keys” tab and copy the load_acc key for the new bucket.

And that’s it! Now you can programmatically interact with your LCP bucket using your favorite S3 SDK and your load_acc API key!
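To avoid hard-coding the key, here is a minimal sketch that reads the load_acc key from the environment and returns the options object for the AWS SDK v3 `S3Client`. The endpoint, region, blank secret and path-style setting mirror the full snippet in this post; the `LOAD_ACC_KEY` variable name and the `buildLs3ClientConfig` helper are hypothetical.

```javascript
// Hypothetical helper: S3Client options for Load S3, built from environment variables.
// Endpoint, region, blank secret and forcePathStyle mirror the snippet in this post;
// the LOAD_ACC_KEY variable name is an assumption.
const LS3_ENDPOINT = "https://api.load.network/s3";

function buildLs3ClientConfig(env) {
  const accessKeyId = env.LOAD_ACC_KEY; // load_acc key copied from cloud.load.network
  if (!accessKeyId) {
    throw new Error("LOAD_ACC_KEY is not set");
  }
  return {
    region: "eu-west-2",
    endpoint: LS3_ENDPOINT,
    credentials: {
      accessKeyId,
      secretAccessKey: "", // left blank, as in the snippet in this post
    },
    forcePathStyle: true, // path-style addressing: bucket name in the URL path
  };
}

// Usage with @aws-sdk/client-s3 (not executed here):
// const s3Client = new S3Client(buildLs3ClientConfig(process.env));
```

Failing fast on a missing key gives a clearer error than an authentication failure from the cluster later on.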

Note: plain objects created with the AWS S3 SDK will not appear in the cloud.load.network UI, because they are not ANS-104 DataItems uploaded with the load-s3-agent. They are, however, accessible programmatically through standard S3 operations such as GetObject!
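Since such objects only show up through the API, listing is how you see what a bucket holds. On Amazon S3, a single ListObjectsV2 response returns at most 1,000 keys and signals more with `IsTruncated` and `NextContinuationToken`; the sketch below assumes LS3 paginates the same way, which this post does not confirm. `collectAllObjects` is a hypothetical helper, and the page fetcher is passed in so the loop does not depend on a live client.

```javascript
// Hypothetical pagination helper for ListObjectsV2-style responses.
// fetchPage(token) must resolve to { Contents, IsTruncated, NextContinuationToken };
// token is undefined for the first request.
async function collectAllObjects(fetchPage) {
  const all = [];
  let token;
  do {
    const page = await fetchPage(token);
    all.push(...(page.Contents ?? [])); // an empty bucket has no Contents field
    token = page.IsTruncated ? page.NextContinuationToken : undefined;
  } while (token);
  return all;
}

// Wiring it to an S3Client configured as in this post (not executed here):
// const objects = await collectAllObjects((token) =>
//   s3Client.send(new ListObjectsV2Command({ Bucket: BUCKET_NAME, ContinuationToken: token })));
```

The AWS SDK v3 also exports `paginateListObjectsV2`, which runs the same loop as an async iterator if you prefer not to maintain it yourself.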

load_acc in action

After creating a bucket and a load_acc API key, you can interact with the Load S3 storage layer via S3-compatible SDKs such as the official AWS S3 SDK. Once the S3 client is initialized with your load_acc key, use it to send the S3 commands you need to interact with the HyperBEAM cluster, such as PutObjectCommand.

Here’s a full snippet you can copy and customize:

```javascript
// Run as an ES module (e.g. save as demo.mjs) after `npm install @aws-sdk/client-s3`
import { S3Client, PutObjectCommand, ListObjectsV2Command, GetObjectCommand } from "@aws-sdk/client-s3";

const endpoint = "https://api.load.network/s3"; // Load S3 cluster endpoint

const BUCKET_NAME = ""; // replace with your bucket's full name
const accessKeyId = ""; // load_acc key, grab it from cloud.load.network
const secretAccessKey = ""; // keep this blank

const s3Client = new S3Client({
  region: "eu-west-2",
  endpoint,
  credentials: {
    accessKeyId,
    secretAccessKey,
  },
  forcePathStyle: true, // path-style addressing: bucket name in the URL path, not the hostname
});

// Upload an object, storing the expiry as custom user metadata
async function uploadFile(bucketName, fileName, body, expiryDays) {
  try {
    const command = new PutObjectCommand({
      Bucket: bucketName,
      Key: fileName,
      Body: body,
      Metadata: {
        "expiry-days": String(expiryDays),
      },
    });
    const result = await s3Client.send(command);
    console.log("Object uploaded:", fileName, "with expiry:", expiryDays);
    return result;
  } catch (error) {
    console.error("Error putting object:", error);
  }
}

// Print every key returned by a single ListObjectsV2 call
async function listBucketContents(bucketName) {
  try {
    const command = new ListObjectsV2Command({ Bucket: bucketName });
    const result = await s3Client.send(command);
    console.log("\nBucket contents:");
    if (result.Contents && result.Contents.length > 0) {
      result.Contents.forEach((obj) => {
        console.log(`- ${obj.Key} (${obj.Size} bytes, modified: ${obj.LastModified})`);
      });
    } else {
      console.log("Bucket is empty");
    }
    return result;
  } catch (error) {
    console.error("Error listing bucket contents:", error);
  }
}

// Download the most recently modified object (from the first page of results) as text
async function getLatestObject(bucketName) {
  try {
    const listResult = await s3Client.send(new ListObjectsV2Command({ Bucket: bucketName }));
    if (!listResult.Contents || listResult.Contents.length === 0) {
      console.log("No objects in bucket");
      return;
    }
    // LastModified is a Date, so subtraction compares timestamps (newest first)
    const latest = listResult.Contents.sort((a, b) => b.LastModified - a.LastModified)[0];
    const getResult = await s3Client.send(
      new GetObjectCommand({ Bucket: bucketName, Key: latest.Key })
    );
    // Body is a stream; transformToString() reads it fully into a string
    const content = await getResult.Body.transformToString();
    console.log(`\nLatest object: ${latest.Key}`);
    console.log(`Contents: ${content}`);
    return content;
  } catch (error) {
    console.error("Error getting latest object:", error);
  }
}

(async () => {
  const fileName = `test-${Date.now()}.txt`;
  await uploadFile(BUCKET_NAME, fileName, new TextEncoder().encode("hello world from the S3 API demo"), "");
  await listBucketContents(BUCKET_NAME);
  await getLatestObject(BUCKET_NAME);
})();
```

Uploading data using AWS S3 SDK / Load Agent vs Turbo SDK

On September 24th we released a Turbo-compliant ANS-104 upload service, making it possible to use the official Turbo SDK as a data uploader to LS3. Together, this upload service and the load-s3-agent form the two main ingress paths for data objects as ANS-104 DataItems.

The main difference between the load-s3-agent and the Turbo SDK is access control. Uploads via the Turbo SDK default to the offchain-dataitems data protocol, where all uploaded data items sit in the protocol’s public bucket. With the load-s3-agent, you can upload objects (DataItems) to private buckets, control access to them, and create expiring shareable download links.

The load-s3-agent abstracts the AWS S3 SDK boilerplate code and makes it super simple to put and get ready-formatted dataitems on Load S3.

It works with your new load_acc key, too:

```bash
echo -n "hello world" | curl -X POST https://load-s3-agent.load.network/upload/private \
  -H "Authorization: Bearer $load_acc_api_key" \
  -H "x-bucket-name: $bucket_name" \
  -H "x-dataitem-name: $dataitem_name" \
  -H "signed: false" \
  -F "file=@-;type=text/plain" \
  -F "content_type=text/plain"
```

Check the load-s3-agent docs for more.
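For Node.js 18 or later, which provides `fetch`, `FormData` and `Blob` as globals, the same private upload can be sketched with `fetch`. It copies the curl call's URL, headers and form fields one for one; the helper names, using the DataItem name as the multipart filename, and the assumption that the agent accepts a `fetch`-built multipart body the same way it accepts curl's are ours, not taken from the load-s3-agent docs.

```javascript
// Hypothetical fetch-based equivalent of the curl upload to the load-s3-agent.
// URL, headers and form fields copy the curl example; the rest is an assumption.
const AGENT_PRIVATE_UPLOAD_URL = "https://load-s3-agent.load.network/upload/private";

function buildPrivateUploadRequest({ apiKey, bucketName, dataitemName, data, contentType }) {
  const form = new FormData();
  // Equivalent of -F "file=@-;type=<contentType>" (filename choice is an assumption)
  form.append("file", new Blob([data], { type: contentType }), dataitemName);
  // Equivalent of -F "content_type=<contentType>"
  form.append("content_type", contentType);
  return {
    url: AGENT_PRIVATE_UPLOAD_URL,
    init: {
      method: "POST",
      headers: {
        Authorization: `Bearer ${apiKey}`,
        "x-bucket-name": bucketName,
        "x-dataitem-name": dataitemName,
        signed: "false",
      },
      body: form, // fetch sets the multipart Content-Type and boundary itself
    },
  };
}

async function uploadPrivate(options) {
  const { url, init } = buildPrivateUploadRequest(options);
  const res = await fetch(url, init);
  if (!res.ok) {
    throw new Error(`Upload failed: ${res.status} ${await res.text()}`);
  }
  return res.text(); // response format is not described here, so return the raw body
}

// Usage (not executed here):
// await uploadPrivate({ apiKey: process.env.load_acc_api_key, bucketName: "my-bucket",
//   dataitemName: "hello.txt", data: "hello world", contentType: "text/plain" });
```

Building the request separately from sending it keeps the header and form mapping easy to inspect before any network call is made.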

Whether you use the load-s3-agent or loaded-turbo with the official Turbo SDK, the outcome is the same: ANS-104-formatted S3 objects with their data provenance guarantees and Arweave alignment.

Load it up

Whether you use the LCP dashboard, the load-s3-agent, or the Loaded Turbo upload service, you can start using Load S3 with different data standards and guarantees. Whether you are interested in Arweave’s ANS-104 data standard or are coming from the web2 world and want to keep using the S3 standard, LS3 is for you. Head to docs.load.network and start loading it up.