Beyond payments and DeFi, blockchains are exceptional at data provenance and storage.

Most chains evolved to optimize for compute, but specialized networks can solve the storage problem orders of magnitude more efficiently than traditional L1s.

Walrus and Load Network emerged to solve this challenge through polar opposite architectures: Walrus applies Byzantine fault tolerance to create a unified storage network adjacent to Sui, while Load S3 is a marketplace of storage providers connected to Arweave’s permanent layer and an EVM L1.

Each has different trade-offs while achieving similar ends: decentralized storage with cryptographic provenance.

In this article we cover:

- Overview

- Comparison notes

- Architecture

- Pricing

- Storage terms and flexibility

- Integrations and compatibility

- Use cases in the wild

What is Walrus?

Walrus is a storage network adjacent to Sui. Data is not stored directly on Sui; it lives on a separate incentivized layer with global BFT consensus. Storage nodes must each hold their assigned share of the network’s data set to stay in consensus with one another, or risk slashing penalties.

Walrus relies on Sui smart contracts for data access control, or ‘programmable storage’. Its Red Stuff encoding protocol is a new approach to erasure coding: it carries a 4.5x replication overhead but provides robust redundancy and efficient recovery, since a node can rebuild its lost data by downloading roughly 1/n of the blob rather than the full blob. Storage terms in Walrus are prepaid in epochs and range from 2 weeks to 2 years, after which data must be renewed or it is deleted.

What is Load Network?

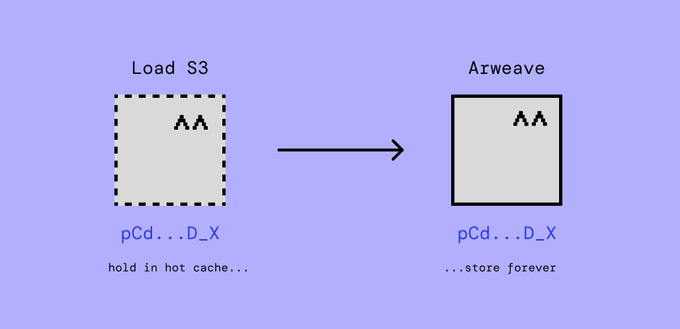

Load Network is a high-throughput EVM L1 with a native Arweave integration and S3-compatible temporary storage layer. Data can either be held in the HyperBEAM-powered Load S3 hot cache or committed permanently to Arweave. Data programmability comes from Load’s EVM smart contract layer and integration with AO.

Load’s S3 storage layer is marketplace-based rather than monolithic and does not rely on global consensus. Instead, storage providers (SPs) accrue reputation and boosted staking rewards for performance and replica count. Ingress protocols adjacent to Load S3 choose the best SP dynamically based on this reputation layer.

Data can be held in the Load S3 hot cache for terms from one month to 5+ years, or piped permanently to Arweave, retaining the same cryptographic provenance and ID across both layers.

A note on comparing apples to apples

In this article, we’re comparing the storage capabilities of Load S3 and Walrus – 2 storage layers adjacent to their parent L1s.

Load’s parent L1(s): Load Network consists of both Load S3 and the Load EVM, two data entrypoints with different guarantees, architectures, performance, and payment structures. The Load EVM L1 matters here as a compute-over-storage layer: data settled to the Load L1 is made permanent via Arweave, and its EVM rails are what make adoption by L2s, DA layers, and EVM dApps possible. Load S3, like Walrus, is a temporary storage layer, differentiated by its native Arweave integration and its adjacency to AO, Arweave’s compute layer.

Walrus’ parent L1: Walrus does not store data on Sui, its parent L1, but does make use of its smart contracts for orchestration and access control. Every Walrus blob creates a transaction on the Sui L1 to register blob metadata, track storage commitments, and enforce access permissions. The actual data is stored off-chain across Walrus storage nodes using erasure coding, while Sui handles coordination, payments, and programmability.

Architecture: Monolith vs Marketplace

With BFT and shared state, Walrus is a monolithic storage network with global consensus. Load S3 is a distributed marketplace where storage providers compete. The marketplace is powered by an onchain reputation layer, and security is inherited from the AO network.

Walrus: global consensus

Walrus operates like a blockchain: all 125 storage nodes participate in a single unified network.

When you upload a blob, the protocol decides where it goes. Your data is encoded via Red Stuff, split into “slivers,” and distributed across the network according to stake weight. Every node must store its assigned portion of the global data set.

Coordination happens through the Sui blockchain. Storage operations reserve space, register blobs, and communicate availability. The nodes watch these transactions and update their state accordingly.

With BFT, the network keeps working as long as fewer than one third of nodes are actively malicious or accidentally unavailable. Cryptographic proofs and slashing penalties enforce honest behavior.

However, this coordination creates overhead and puts a hard cap on throughput and network size, because every storage operation touches the blockchain and every epoch transition requires network-wide coordination: each epoch’s challenge involves 2f+1 nodes reaching agreement.
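The one-third bound and the 2f+1 figure come from standard BFT quorum arithmetic. The sketch below is a minimal illustration that counts equal-weight members; the article notes that Walrus assigns data by stake weight, and the sketch ignores that. The function names are illustrative only.

```python
def fault_tolerance(n: int) -> int:
    """Largest number of Byzantine members f that n members can tolerate (n >= 3f + 1)."""
    if n < 1:
        raise ValueError("committee must have at least one member")
    return (n - 1) // 3


def quorum(n: int) -> int:
    """Smallest quorum q where any two quorums share at least f + 1 members,
    so they always overlap in at least one honest member: 2q - n >= f + 1."""
    f = fault_tolerance(n)
    q = (n + f + 2) // 2  # integer form of ceil((n + f + 1) / 2)
    assert q <= n - f, "honest members alone must be able to form a quorum"
    return q


for n in (4, 124, 125):
    f = fault_tolerance(n)
    print(f"n={n}: tolerates f={f}, quorum={quorum(n)}, 2f+1={2 * f + 1}")
```

When n = 3f + 1 exactly (for example, 124 = 3·41 + 1), the minimal quorum equals 2f+1 = 83. For the article's 125 nodes, f is still 41, but the quorum rounds up to 84.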

Walrus’s global consensus makes it powerful but constrained. The network’s write throughput is limited by metadata overhead and the Sui base layer, at around 18 MB/s per client in testing. Whether adding nodes increases aggregate throughput depends on the workload: many concurrent operations from different clients should benefit from more nodes, but single-blob operations remain constrained by the coordination protocol. The network can scale to hold more data, but not necessarily to perform operations faster.

Load: free market of SPs

In Load, each storage provider runs an ao-HyperBEAM node backed by its own S3-compatible infrastructure, using custom software packaged as a HyperBEAM device. SPs set their own pricing and stake $LOAD tokens for the storage layer’s security. An AO-based reputation layer tracks how reliably they serve data, how quickly they respond, and whether they actually store what they claim.

Users either pick which SPs to trust or delegate routing to a Wayfinder-style protocol that chooses based on the same onchain metrics that feed the reputation score. There’s no global state that everyone must agree on. Each SP maintains its own atomic state: if an SP goes down, your data is at risk only if you chose not to replicate it elsewhere. If an SP cheats, the reputation system tanks its ability to attract future business, and slashing hits its stake.
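Reputation-based routing with optional replication can be sketched as follows. The metrics, weights, and function names are hypothetical stand-ins for whatever Load’s reputation layer actually computes. The availability figure assumes that SPs fail independently.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StorageProvider:
    name: str
    uptime: float      # share of successful retrievals, 0..1 (hypothetical metric)
    latency_ms: float  # median response latency in ms (hypothetical metric)
    proof_rate: float  # share of storage challenges passed, 0..1 (hypothetical metric)


def reputation(sp: StorageProvider, max_latency_ms: float = 1000.0) -> float:
    """Hypothetical score in [0, 1]: weighted blend of reliability, speed and proofs."""
    speed = max(0.0, 1.0 - sp.latency_ms / max_latency_ms)
    return 0.5 * sp.uptime + 0.2 * speed + 0.3 * sp.proof_rate


def route(providers: list[StorageProvider], replicas: int) -> list[StorageProvider]:
    """Choose the `replicas` best-scoring SPs; each one holds an independent copy."""
    return sorted(providers, key=reputation, reverse=True)[:replicas]


def availability(failure_probs: list[float]) -> float:
    """P(at least one replica is reachable), assuming SPs fail independently."""
    p_all_down = 1.0
    for p in failure_probs:
        p_all_down *= p
    return 1.0 - p_all_down


providers = [
    StorageProvider("sp-a", uptime=0.99, latency_ms=80, proof_rate=0.98),
    StorageProvider("sp-b", uptime=0.95, latency_ms=40, proof_rate=0.99),
    StorageProvider("sp-c", uptime=0.90, latency_ms=300, proof_rate=0.80),
]
chosen = route(providers, replicas=2)
print([sp.name for sp in chosen])  # ['sp-a', 'sp-b']
print(availability([0.01, 0.01]))  # two replicas, each down 1% of the time
```

This illustrates the trade-off described above. A single SP leaves your data exposed to that SP’s failures, while each extra replica on an independent SP multiplies the chance that every copy is down at once.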

The key advantage over BFT is horizontal scalability. Every new SP adds capacity and throughput independently - no coordination overhead between SPs. Users route to whoever’s closest or fastest, and the network grows without consensus bottlenecks.

The disadvantage: less protocol-enforced security, with a fallback to free-market mechanisms (reputation and staking) instead.

Load’s marketplace model scales differently from Walrus. Each SP operates independently, so adding SPs increases both capacity and aggregate throughput. Ten SPs means ten times the network capacity, and potentially ten times the bandwidth if users distribute their traffic across them. There’s no consensus bottleneck because no consensus is required.

Load’s permanent storage via Arweave offers a third model: hundreds of miners storing data with economic incentives to maintain copies. No coordination between Load and Arweave is needed for this to work, because data commits atomically and the same ANS-104 identifier works in both systems. Read more about xANS-104 data provenance and policies here.

Performance implications of network architecture

Each storage layer’s performance is inherited from its base network. Walrus depends on both its own storage node network and Sui. The Load L1 can be used as a Turing-complete EVM entrypoint to Arweave, while Load S3 operates independently and depends on AO for security and coordination.

Let’s look at the metrics for each, including the Load EVM since it can be efficiently used for storage directly.

Walrus has a write bandwidth of roughly 18 MB/s per client, a limit inherited from the network’s consensus, its metadata overhead, and its dependency on Sui.

Load S3’s write bandwidth is constrained by the network speed of the individual storage provider. At the time of writing, average SP bandwidth is 1 Gbps (about 125 MB/s). The Load EVM’s throughput is 125 MB/s, derived from a cost of 8 gas per non-zero calldata byte and a threshold of 1 gigagas per second. Via large bundles, the Load EVM can handle transaction bundles of up to 498 GB, while Load S3’s theoretical maximum is capped by the capacity of the chosen storage provider. At the time of writing, that’s 600 TB of capacity across 2 SPs.
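Both 125 MB/s figures above follow from simple unit arithmetic. The same arithmetic gives the idealised aggregate for several independent SPs described earlier. This rough sketch uses only numbers quoted in this article. It ignores per-transaction base gas, protocol framing, and real-world contention, so treat the results as ceilings rather than benchmarks.

```python
GAS_PER_SECOND = 1_000_000_000  # 1 gigagas/s threshold quoted above
GAS_PER_NONZERO_BYTE = 8        # Load EVM calldata cost quoted above
BYTES_PER_MB = 1_000_000        # decimal megabytes


def evm_calldata_mb_s(gas_per_s: int = GAS_PER_SECOND,
                      gas_per_byte: int = GAS_PER_NONZERO_BYTE) -> float:
    """Ceiling on calldata throughput if the whole gas budget buys non-zero bytes."""
    return gas_per_s / gas_per_byte / BYTES_PER_MB


def link_mb_s(gbps: float) -> float:
    """Convert a link speed in gigabits per second to megabytes per second."""
    return gbps * 1_000_000_000 / 8 / BYTES_PER_MB


def aggregate_sp_mb_s(sp_links_gbps: list[float]) -> float:
    """Idealised Load S3 aggregate: clients spread evenly, no shared bottleneck."""
    return sum(link_mb_s(g) for g in sp_links_gbps)


print(evm_calldata_mb_s())            # 125.0
print(link_mb_s(1))                   # 125.0
print(aggregate_sp_mb_s([1.0] * 10))  # 1250.0
```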

Pricing

For projects storing gigabytes or terabytes, the choice of storage layer can mean a difference of thousands or millions of dollars over time.

Walrus pricing

Walrus charges in two components:

- Write (one-time): 20,000 FROST/MB

- Store (recurring): 11,000 FROST/MB/epoch

Note: 1 WAL = 1,000,000,000 FROST

Storage must be prepaid in epochs, for terms ranging from 2 weeks to a maximum of 2 years. After the prepaid period expires, data is deleted unless renewed. (There is no protocol-level mechanism for automatic renewal.)
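Prepaid Walrus costs follow directly from the two rates above. This minimal sketch assumes that costs scale linearly with billed size and epoch count. `billed_mb` stands for whatever size the protocol bills, which the small-file section below shows can be far larger than the raw file. `MAX_EPOCHS` is derived from this article’s ~2-year (104-week) cap rather than read from the protocol.

```python
FROST_PER_WAL = 1_000_000_000
WRITE_FROST_PER_MB = 20_000            # one-time write fee
STORE_FROST_PER_MB_PER_EPOCH = 11_000  # recurring storage fee
MAX_EPOCHS = 52                        # ~2 years of 2-week epochs, per this article


def walrus_cost_wal(billed_mb: int, epochs: int) -> float:
    """WAL owed upfront to store `billed_mb` for `epochs` epochs."""
    if not 1 <= epochs <= MAX_EPOCHS:
        raise ValueError(f"epochs must be between 1 and {MAX_EPOCHS}")
    frost = billed_mb * (WRITE_FROST_PER_MB + STORE_FROST_PER_MB_PER_EPOCH * epochs)
    return frost / FROST_PER_WAL


def walrus_cost_usd(billed_mb: int, epochs: int, wal_usd: float) -> float:
    """Same cost converted at a given WAL/USD price."""
    return walrus_cost_wal(billed_mb, epochs) * wal_usd


# 1,000 billed MB for 26 epochs (~1 year), at the $0.38 WAL price quoted below
print(walrus_cost_wal(1_000, 26))  # 0.306
print(walrus_cost_usd(1_000, 26, 0.38))
```

Keeping data beyond the cap means paying again before expiry, which is the renewal burden discussed under Storage terms and flexibility.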

Load Network pricing

Load offers two pricing models depending on layer (temporary or permanent):

Load S3 (Temporary Storage):

Pricing is set by individual storage providers and kept in check by competition between SPs. Specific rates depend on the chosen provider, with storage terms ranging from seconds to years. The anchor rate, set by the SP that Load Network itself runs, is $0.015/GB/mo.

Arweave (Permanent Storage via Load):

One-time payment of [approximately $13.95 per GB](https://ar-fees.arweave.net) (at the time of writing) for permanent storage. No renewals, no expiration. Data persists indefinitely through Arweave’s endowment model and is accessible via 600+ gateways.

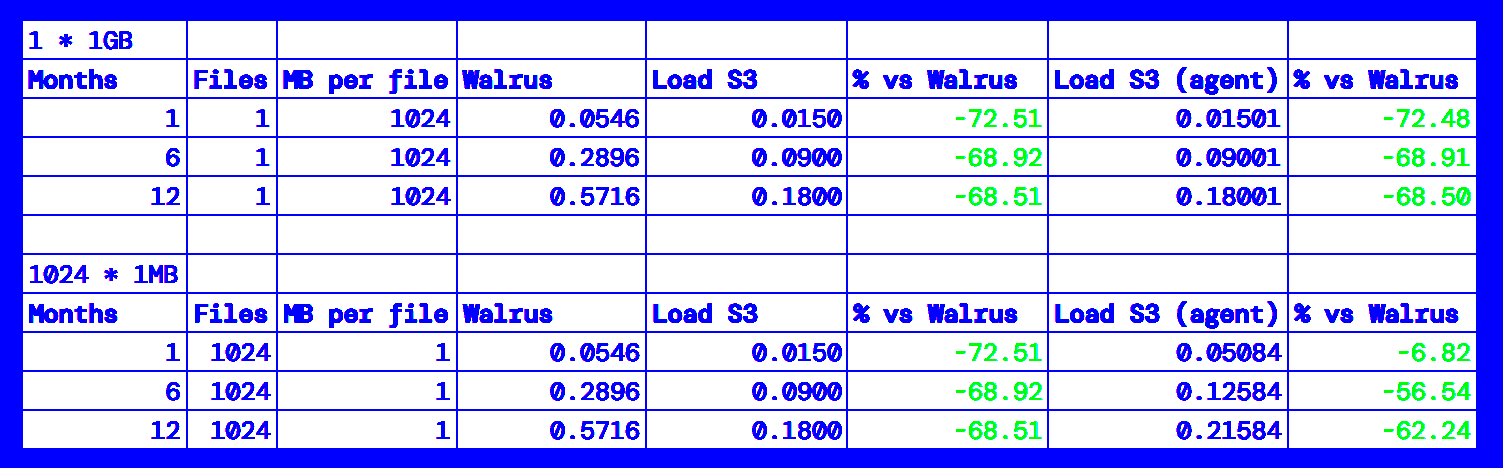

Cost over time

Here’s what 1 GB of storage costs across different timeframes, factoring in the s3-agent fees for cases where the user uploads ANS-104 DataItems directly.

* Load S3 pricing is marketplace-based and set by individual SPs.

** WAL price at time of writing: $0.38

The economics favor Load S3 for short-term hot storage, with the option to commit to Arweave for permanent archival when needed, retaining the same ANS-104 DataItem integrity and provenance across both systems.
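The Load-side numbers can be reproduced from the rates quoted in this article. This minimal sketch assumes decimal gigabytes and that the optional s3-agent fees are charged once at upload; both are assumptions. Walrus figures can be derived from the FROST rates in the Walrus pricing section.

```python
S3_USD_PER_GB_MONTH = 0.015   # anchor rate of Load Network's own SP
ARWEAVE_USD_PER_GB = 13.95    # one-time, at the time of writing
AGENT_USD_PER_FILE = 0.00002  # optional s3-agent DataItem upload fee
AGENT_USD_PER_MB = 0.000015
MB_PER_GB = 1_000             # decimal units (assumption)


def agent_fee(size_gb: float, files: int = 1) -> float:
    """s3-agent fee, assumed to be charged once at upload."""
    return files * AGENT_USD_PER_FILE + size_gb * MB_PER_GB * AGENT_USD_PER_MB


def load_s3_cost(size_gb: float, months: int, files: int = 1,
                 via_agent: bool = True) -> float:
    """Hot-cache storage at the anchor rate, plus the optional upload fee."""
    storage = size_gb * months * S3_USD_PER_GB_MONTH
    return storage + (agent_fee(size_gb, files) if via_agent else 0.0)


def breakeven_months() -> float:
    """Months of hot storage after which the one-time Arweave fee is cheaper."""
    return ARWEAVE_USD_PER_GB / S3_USD_PER_GB_MONTH


for months in (1, 12, 60):
    print(months, round(load_s3_cost(1, months), 5))
print(round(breakeven_months()))  # 930 months, i.e. 77.5 years
```

The break-even line compares storage fees only. At the $0.015 anchor rate, 1 GB would need to sit in the hot cache for about 930 months before the one-time Arweave fee became the cheaper option.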

The small file problem

Walrus’s pricing includes a hidden cost for small files. Each blob requires Merkle tree commitments for metadata, which can total up to 64 MB per blob (!!!) in the worst case.

Impact on a 1 MB file:

- Actual data: 1 MB

- Metadata overhead: ~64 MB

- You pay for: ~65 MB (65x multiplier)

- Combined with 4.5x encoding: ~290 MB total storage cost

Load’s model charges proportionally to file size with standard erasure coding overhead (~1.5-2x), regardless of file count. A 1 MB file costs storage for about 2 MB.

Operational costs beyond storage

Walrus additional costs:

- SUI gas for every blockchain transaction (register, certify, renew)

- Infrastructure to monitor expiration and execute renewals

- Maximum 2-year prepayment requires operational overhead for long-term storage

Load additional costs:

- $LOAD gas if storing via programmable EVM calldata

- Per-file ($0.00002) and per-MB ($0.000015) rates for optional s3-agent DataItem uploading

Pricing comparison by use case

Walrus is competitive for:

- Applications already built on Sui requiring tight integration

- Use cases where Byzantine fault tolerance is a strict requirement

Load is suited for:

- Cheaply storing ANS-104 dataitems in a hot cache indefinitely

- Multichain projects needing storage across ecosystems (AR/ETH/SOL dataitem signing)

- Teams wanting to avoid operational overhead of renewal management (indefinite terms, Arweave option)

- Teams wanting to migrate from web2 to web3 storage with near-zero migration overhead

Storage terms and flexibility

Walrus: fixed terms, temporary storage

Walrus storage is prepaid in 2-week epochs. Minimum term: 2 weeks. Maximum: approximately 2 years (104 weeks).

After your prepaid period expires, data is deleted unless it is manually renewed before expiration. For long-lived applications, this means custom renewal infrastructure: monitoring expirations, maintaining token balances, handling transaction failures, and alerting on issues. Every project using Walrus long-term must solve this problem independently, because there is no protocol-level mechanism for automatic renewal.
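The monitoring half of that renewal infrastructure reduces to a periodic check like the one below. This is a minimal sketch. `TrackedBlob`, its `end_epoch` semantics, and the safety window are hypothetical. Submitting the actual Sui renewal transaction and keeping token balances topped up are deliberately left out.

```python
from dataclasses import dataclass

EPOCH_DAYS = 14  # Walrus epochs are 2 weeks, per this article


@dataclass(frozen=True)
class TrackedBlob:
    blob_id: str    # hypothetical identifier kept by your own tracker
    end_epoch: int  # hypothetical: first epoch in which the blob is no longer paid for


def plan_renewals(blobs: list[TrackedBlob], current_epoch: int, safety_epochs: int = 2):
    """Split blobs into already-expired ones and ones that need renewing soon."""
    expired = [b for b in blobs if b.end_epoch <= current_epoch]
    due = [b for b in blobs
           if current_epoch < b.end_epoch <= current_epoch + safety_epochs]
    return expired, due


def days_left(blob: TrackedBlob, current_epoch: int) -> int:
    """Remaining paid days, rounded to whole epochs."""
    return max(0, blob.end_epoch - current_epoch) * EPOCH_DAYS


blobs = [TrackedBlob("a", 9), TrackedBlob("b", 11), TrackedBlob("c", 40)]
expired, due = plan_renewals(blobs, current_epoch=10)
print([b.blob_id for b in expired], [b.blob_id for b in due])  # ['a'] ['b']
```

Anything in `expired` is already lost. The safety window exists so that a failed or delayed renewal transaction can be retried before a blob reaches that list.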

Load: flexible terms, optional permanence

Load S3 has no fixed term structure, but given monthly pricing, most SPs will likely set a one-month minimum. Users set their own terms with their chosen provider at upload time, ranging from seconds to years. Load S3 inherits S3’s expiry rules.
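If Load S3 inherits S3’s expiry rules, terms could in principle be expressed as a standard S3 lifecycle configuration. This minimal sketch assumes the provider honors bucket lifecycle rules, which this article does not confirm. The endpoint URL and bucket name are placeholders. S3 lifecycle expiration is day-granular, so sub-day terms would need a different mechanism.

```python
def expiry_rule(prefix: str, days: int) -> dict:
    """One S3 lifecycle rule that expires objects under `prefix` after `days` days."""
    if days < 1:
        raise ValueError("S3 lifecycle expiration is set in whole days (>= 1)")
    return {
        "ID": f"expire-{prefix.strip('/') or 'all'}-{days}d",
        "Filter": {"Prefix": prefix},
        "Status": "Enabled",
        "Expiration": {"Days": days},
    }


lifecycle = {"Rules": [expiry_rule("tmp/", 30), expiry_rule("logs/", 365)]}

# Applying it with boto3 against an S3-compatible endpoint would look like:
#   import boto3
#   s3 = boto3.client("s3", endpoint_url="https://<load-s3-endpoint>")  # placeholder
#   s3.put_bucket_lifecycle_configuration(Bucket="my-bucket",
#                                         LifecycleConfiguration=lifecycle)
print(lifecycle["Rules"][0]["ID"])  # expire-tmp-30d
```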

But here’s where it gets interesting: xANS-104 state transitions. Every object stored in Load S3 is a cryptographically signed ANS-104 dataitem. This gives you three lifecycle paths:

- Temporal (tANS-104): Store temporarily on Load S3, delete when done

- Void (∅ANS-104): Let it expire or explicitly delete

- Permanent (∞ANS-104): Commit to Arweave, same ID

The ANS-104 signature remains constant across all states. Your workflow looks like:

- Upload to Load S3 (fast, S3- and Turbo-compatible, with flexible terms)

- Serve from Load S3 or an Arweave-compatible gateway with low latency

- Optionally: commit to Arweave for permanence (one transaction)

Load S3 allows users to upgrade data from temporary to permanent storage without any migration complexity - just a state transition from t to ∞ with a single API call. Same cryptographic identifier, and the provenance remains intact.
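The lifecycle paths above behave like a small state machine in which only the state changes and the ID carries over. This is a minimal sketch. The class and function names are illustrative, not Load’s API, and treating ∅ and ∞ as terminal states is an assumption.

```python
from dataclasses import dataclass, replace
from enum import Enum


class Lifecycle(Enum):
    TEMPORAL = "tANS-104"   # held in the Load S3 hot cache
    VOID = "∅ANS-104"       # expired or explicitly deleted
    PERMANENT = "∞ANS-104"  # committed to Arweave


# A temporal item can be voided or made permanent; the other two states are
# treated as terminal in this sketch.
ALLOWED = {
    Lifecycle.TEMPORAL: {Lifecycle.VOID, Lifecycle.PERMANENT},
    Lifecycle.VOID: set(),
    Lifecycle.PERMANENT: set(),
}


@dataclass(frozen=True)
class StoredDataItem:
    dataitem_id: str  # ANS-104 ID, identical in every state
    state: Lifecycle


def transition(item: StoredDataItem, target: Lifecycle) -> StoredDataItem:
    """Move an item to a new lifecycle state, keeping its ID unchanged."""
    if target not in ALLOWED[item.state]:
        raise ValueError(f"cannot move {item.state.name} -> {target.name}")
    return replace(item, state=target)


item = StoredDataItem("example-id", Lifecycle.TEMPORAL)  # hypothetical ID
archived = transition(item, Lifecycle.PERMANENT)
print(archived.dataitem_id == item.dataitem_id, archived.state.name)  # True PERMANENT
```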

Integrations and compatibility

Walrus

Walrus’s integration layer centers on Sui blockchain connectivity and emerging compatibility standards.

Sui smart contracts

Storage resources and blob metadata exist as Sui objects, allowing smart contracts to check blob availability, extend storage lifetimes, or delete data. This enables programmable storage logic - for example, an NFT minting contract can verify the artwork is available on Walrus before minting.

Seal

Walrus integrates with Seal, an access control layer that enables encryption and permissioned access to stored blobs. While Walrus storage is public by default, Seal adds confidentiality through client-side encryption and key management, allowing private data storage use cases.

CDN and caching layer

Walrus supports integration with traditional content delivery networks and HTTP caches through aggregator and publisher nodes. This brings decentralized storage into compatibility with existing web infrastructure while maintaining the option for fully decentralized access.

Developer tooling

CLI, JSON API, and HTTP API for storage operations. SDKs for programmatic access. Browser-based tools for managing storage without running local infrastructure.

Walrus is tightly coupled to Sui - if you’re building on Sui, Walrus provides native storage primitives. Outside Sui’s ecosystem, integration requires bridging through Walrus’s HTTP layer or custom infrastructure to handle the Sui transactions.

Load Network

Load is tightly integrated with Arweave’s storage and compute layers alongside the EVM tooling ecosystem. With both traditional HTTP support and web3 wallet integrations, Load’s emphasis is on meeting developers in the stack they already use.

Arweave, via ANS-104 and Reth ExExes

Load has a native integration with Arweave’s permanent storage layer using the ANS-104 DataItem standard. Data stored in Load S3 can transition to Arweave permanence while maintaining the same cryptographic identifier and provenance, enabling upgrades from temporary to permanent storage.
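The identifier survives the move because of how ANS-104 defines it. A DataItem’s ID is the SHA-256 hash of its signature, encoded as unpadded base64url. The ID therefore depends only on the signed item, not on where its bytes are stored. This minimal sketch shows that derivation. Parsing the signature out of a serialized DataItem is omitted, and the input is a dummy byte string rather than a real signature.

```python
import base64
import hashlib


def dataitem_id(signature: bytes) -> str:
    """ANS-104 DataItem ID: base64url(SHA-256(signature)) without '=' padding."""
    digest = hashlib.sha256(signature).digest()
    return base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")


sig = b"\x01" * 512  # dummy 512-byte value standing in for an Arweave RSA signature
id_in_load_s3 = dataitem_id(sig)
id_on_arweave = dataitem_id(sig)
print(id_in_load_s3 == id_on_arweave, len(id_in_load_s3))  # True 43
```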

Every Load S3 storage node runs an Arweave-like gateway which resolves offchain ANS-104 DataItems in the same way that a gateway like arweave.net resolves onchain data.

DataItems aside, every transaction that flows through the Load EVM L1 is permanently stored on Arweave, making permanent storage a side effect to any compute operation and reducing reliance on archive nodes.

AO (Arweave’s hyperparallel computer)

Load integrates with AO for advanced data programmability. This allows smart contracts to interact with data across both Load and Arweave, enabling use cases that aren’t possible on traditional blockchains, like GPU compute and data-intensive processing. The Load storage network will use AO for both security and computation.

Turbo (responsible for 90%+ of Arweave data ingress)

To stay 1:1 compatible with the Arweave ecosystem, Load S3 storage is accessible via AR.IO’s Turbo uploader. The loaded-turbo-api is the first Turbo-compliant, offchain, S3-based upload service running on HyperBEAM. With it, Turbo users and the broader Arweave ecosystem can use Load S3 temporary storage directly via the official Turbo SDK with a simple one-line endpoint change.

S3 compatibility

Full compatibility with the AWS S3 API means existing tooling, SDKs, and applications work without modification. Developers can use standard S3 clients, backup tools, and workflows while storing data on decentralized infrastructure.

EVM assets via Hyperlane

Cross-chain messaging protocol integration enables asset bridging and data access across multiple blockchain ecosystems, making Load storage available to applications on any Hyperlane-connected chain.

EVM developer tooling

As an EVM L1, Load natively supports the entire Ethereum toolchain (MetaMask, Hardhat, Foundry, ethers.js, web3.js) and can interoperate with any EVM-compatible chain through standard bridging protocols.

Load is built for integration in your existing stack - composability across web2 and onchain ecosystems rather than tight coupling to a single chain. Storage works either onchain or independently, with optional programmability when needed.

Use cases in the wild

Walrus

NFTs

Both leading NFT projects like Pudgy Penguins and Claynosaurz, and niche Sui-ecosystem launches, use Walrus for asset and metadata storage.

Media and docs

Blockchain news sites like Decrypt and Unchained use Walrus for media storage, and Walrus Sites hosts a variety of frontends for dApps in the Sui ecosystem and the Walrus docs themselves.

Personal file storage

Apps like Tusky offer a user-friendly alternative for everyday people looking to store files in a Google Drive-like UI. Note: comparison between Load Cloud and Tusky coming soon.

Load Network

Blob and ledger storage

Chains like Metis, Avalanche, and RSS3 use Load Network to store complete replicas of onchain history, with Load acting like a decentralized archive node. Blobscan, EigenDA, and Dymension store blobs on Load Network to preserve them even after their source purges them following the two-week challenge period. As well as being generally compatible with EVM stacks, Load is OP-Stack compatible, making it easy to drop in for DA and storage.

Decentralized publishing & art

Onchain blog hosting platforms like fairytale.sh and podcast hosts like permacast.app free creators from dependency on rent-seeking web2 hosts. Clubs like MEDIADAO incentivize archivists to preserve important media onchain permanently, and tools such as shortcuts.bot provide domain names for content stored on Load.

Platforms like OLTA use Load S3’s low-latency, Arweave-adjacent storage to store continuous live feeds of the state of interactive artworks, allowing state to be periodically submitted to Arweave and AO without L1 bottlenecks.

Personal cloud (with developer tooling)

The Load Cloud Platform provides a friendly UI for private S3 object storage. Files are stored as ANS-104 DataItems which can be published to Arweave permanently at any time. The platform also offers API access, allowing Load S3 to be used with the official AWS S3 SDK.

DeFi use cases

Since the core of Load Network is an EVM L1, Load supports DeFi applications like tapestry.fi (DEX), naufi.xyz (data-storage-pegged stablecoin), Astro (USD stablecoin), and Hyperlane for crosschain asset bridging. This gives financial power to data and enables richer use cases than just storage.

Wrapping up: key differentiators

Architecture & security

Walrus uses BFT global consensus with 125 nodes maintaining shared state and slashing penalties – protocol-enforced security but with coordination overhead that caps throughput at ~18 MB/s per client.

Load uses a marketplace model where storage providers compete independently – horizontal scalability without consensus bottlenecks (1 Gbps per SP, ~125 MB/s via EVM), but security relies on reputation and staking rather than protocol guarantees.

Pricing & economics

Walrus charges fixed rates in FROST tokens (20,000 FROST/MB write + 11,000 FROST/MB/epoch storage) with significant metadata overhead for small files (up to 64 MB per blob).

Load has marketplace-based pricing (~$0.015/GB/mo anchor rate) with proportional costs regardless of file size, plus optional one-time permanent storage via Arweave ($13.95/GB).

Storage terms & flexibility

Walrus requires prepaid terms of 2 weeks to 2 years plus custom renewal infrastructure; data is deleted after expiration unless renewed (there is no automatic protocol-level renewal).

Load offers flexible terms (seconds to years) with optional state transitions to permanent Arweave storage – same ANS-104 identifier across temporary and permanent states, no migration complexity.

Integrations & compatibility

Walrus is tightly coupled to Sui – native integration with Sui smart contracts for programmable storage, but requires bridging infrastructure for use outside Sui ecosystem.

Load is ecosystem-agnostic – full S3 API compatibility, native EVM tooling support, Arweave/AO integration, Turbo SDK compatibility, and works with existing web2 workflows without modification.

Operational overhead

Walrus requires ongoing maintenance – monitoring expirations, managing token balances, handling renewal transactions, and maintaining custom infrastructure for long-term storage.

Load minimizes operational burden – no forced renewals, optional permanence upgrade with one API call, S3-standard expiry rules, and familiar tooling for developers migrating from web2.

Get started with Load S3 with a storage grant of up to 100GB/mo here.