Today we are pleased to announce the first-ever S3-compatible API that uses Arweave as the underlying storage network. With this new Load S3 API release, users can use Arweave, via the ar.io Turbo bundling service, for S3-style bucket-object storage.

The exact same developer experience you’re used to in web2, with the power of permanence.

Let’s look at how it works under the hood, why we built it, and how to get started.

A few words about S3 and Load’s S3 evolution

Amazon Simple Storage Service (Amazon S3) is an object storage service that has become the default choice for centralized storage. Over 4 million AWS users have stored more than 350 trillion objects using Amazon S3 for use cases such as data lakes, websites, mobile applications, backup and restore, archive, enterprise applications, IoT devices, and big data analytics.

AWS itself controls around a third of the total cloud market, which makes the S3 framework one of the most well-known storage paradigms.

S3 != AWS

S3 is a protocol made popular by AWS, but it has many neutral open source implementations, like Ceph and MinIO, that use the exact same bucket-object architecture.

Almost every developer has used S3, or has at least heard about it – and that’s more than enough reason for us to build an S3-compatible API that interfaces with Load Network and Arweave. Why? To meet users where they are. That means more web2 data pipelines and an easier developer experience.

The path from load0

We started the Load S3 client on top of load0 storage (EVM bundles), which settles data to Load Network L1, and then the L1 settles the data to Arweave.

This long pipeline of data bundling, compression, serialization, and eventual settlement on Arweave has pros and cons. The pros: object storage on Load Network as a first-class citizen, paired with EVM programmability and permanent storage guarantees. The cons: decoding. On the Arweave side, decoding an object from Load’s final data format back to its raw data is a complex process that adds computational overhead and latency. (It’s possible but complex, and we are working on a solution!)

load0 -> S3 + Turbo

When thinking about how to extend the usefulness of load0, we asked ourselves: why not make our S3 API able to post data directly to both Load and Arweave? It’s the shortest path, and it adds real value to the Arweave ecosystem.

The new path uses Turbo, a service built by the ar.io team that makes it simple to pay for Arweave storage and ensures data is quickly uploaded and indexed. From a UX perspective, speed is vital to the Load S3 integration. On our side, it is just as vital to abstract the AR payment behind an API key, so that authenticating an application and paying for storage work exactly as any web2 developer would expect.
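As a rough sketch of how that API-key abstraction could look from the client side, the helper below builds an S3 client configuration from a single load_acc API key. The endpoint, region, and empty secret are taken from the upload example later in this post; the helper name `loadS3Config` is hypothetical and not part of any SDK.

```javascript
// Hypothetical helper (not part of any SDK): builds the options object
// that would be passed to `new S3Client(...)` from @aws-sdk/client-s3.
function loadS3Config(apiKey) {
  if (typeof apiKey !== "string" || apiKey.length === 0) {
    throw new Error("A load_acc API key is required");
  }
  return {
    region: "eu-west-2", // value used in the upload example in this post
    endpoint: "https://s3.load.rs", // Load S3 endpoint from the same example
    credentials: {
      accessKeyId: apiKey, // the API key is the only credential supplied
      secretAccessKey: "", // intentionally empty, as in the example
    },
    forcePathStyle: true, // address buckets by path rather than by hostname
  };
}

// Usage (placeholder key, not a real credential):
const config = loadS3Config("load_acc_example_key");
console.log(config.endpoint);
```

With the AWS SDK installed, the result would be passed straight to the client constructor, for example `new S3Client(loadS3Config(process.env.LOAD_ACCESS_KEY))`.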

With Turbo integrated into this new S3 client upgrade, cloud.load.network users can use S3 object storage with 2 different storage providers:

- load0: EVM programmability paired with Arweave permanent storage.

- ANS-104 dataitems (Turbo): Direct Arweave permanent storage.
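The provider is chosen per object. Here is a minimal sketch, assuming (as the upload example in this post suggests) that the `uploader-api: turbo` metadata entry is what routes an object to Arweave through Turbo. It further assumes that omitting the entry leaves the object on the default load0 path, which this post does not confirm. The function name `putObjectInput` is hypothetical.

```javascript
// Hypothetical helper: builds the input object for PutObjectCommand.
// Assumption: without the "uploader-api" metadata entry the object
// follows the default load0 path; the post does not state this explicitly.
function putObjectInput(bucket, key, body, { arweave = false } = {}) {
  const input = { Bucket: bucket, Key: key, Body: body };
  if (arweave) {
    // Metadata entry from the post's example: push the object to Arweave
    input.Metadata = { "uploader-api": "turbo" };
  }
  return input;
}

const body = new TextEncoder().encode("hello world from load s3");
const input = putObjectInput("your-bucket-name", "hello.txt", body, { arweave: true });
console.log(input.Metadata);
```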

Load Network Cloud Platform - Arweave S3

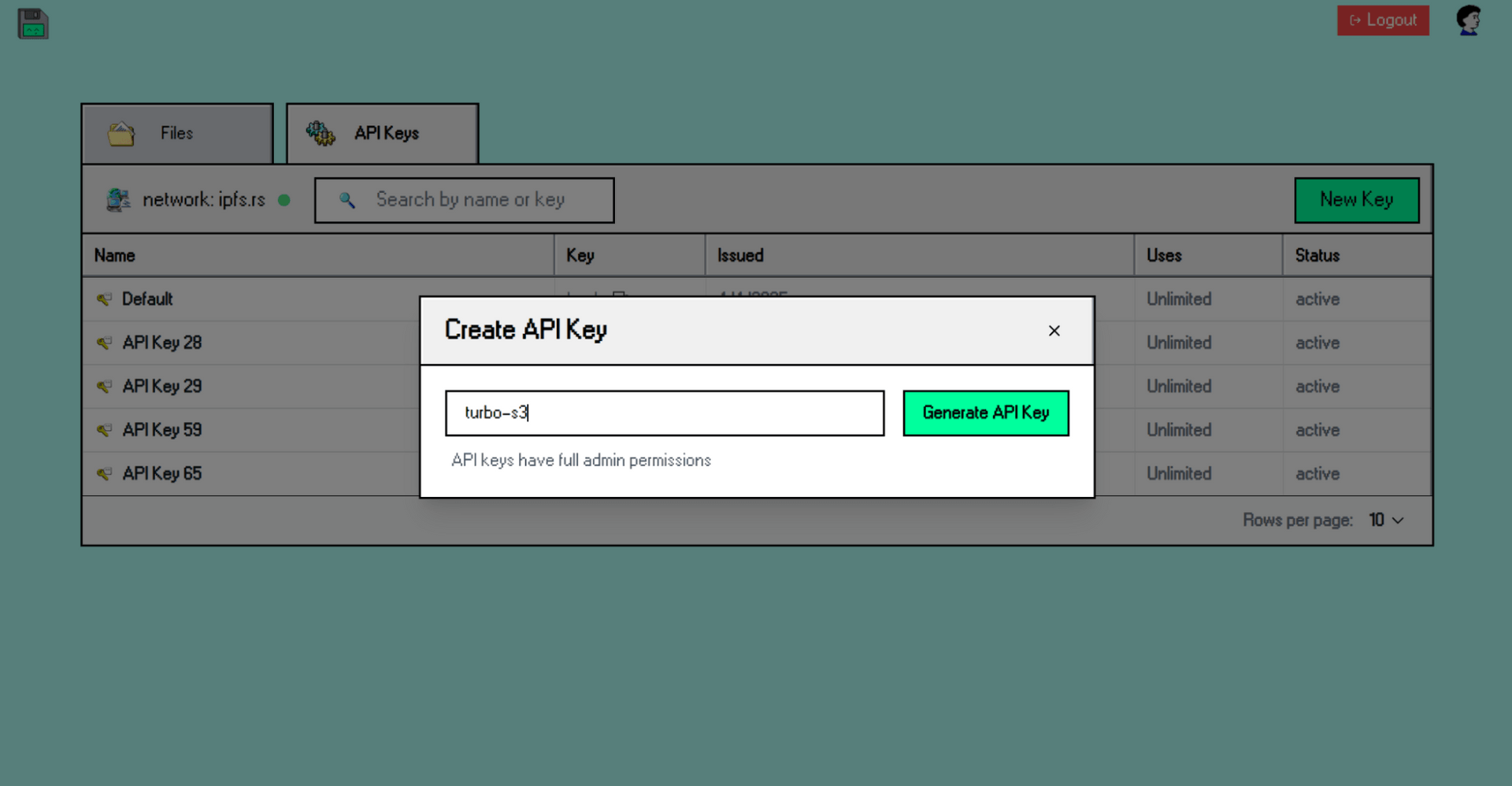

In the current MVP release, users can use the Arweave-powered S3 client by creating load_acc API keys from cloud.load.network – this release will subsidize the Arweave upload fees, with each object limited to 25 MB.
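Because of the per-object limit, it can be worth checking the size on the client before uploading. The sketch below assumes the limit means 25 × 1024 × 1024 bytes. The post does not say whether MB is decimal or binary, so treat the constant as a placeholder. The function names are illustrative.

```javascript
// Assumption: 25 MB is read as 25 MiB. Adjust if the service counts decimal megabytes.
const MAX_OBJECT_BYTES = 25 * 1024 * 1024;

// Returns the size in bytes of a string (UTF-8 encoded), ArrayBuffer, or typed array/Buffer.
function byteLength(body) {
  if (typeof body === "string") return new TextEncoder().encode(body).length;
  if (body instanceof ArrayBuffer || ArrayBuffer.isView(body)) return body.byteLength;
  throw new TypeError("Unsupported body type");
}

// True when the body is within the assumed per-object limit.
function fitsObjectLimit(body) {
  return byteLength(body) <= MAX_OBJECT_BYTES;
}

console.log(fitsObjectLimit("hello world from load s3")); // true
```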

In the very near future, we will be integrating an ao-powered credits system for Load Cloud (LNCP). Users will be able to purchase cloud credits using ao network tokens, primarily $AO.

Use Arweave S3

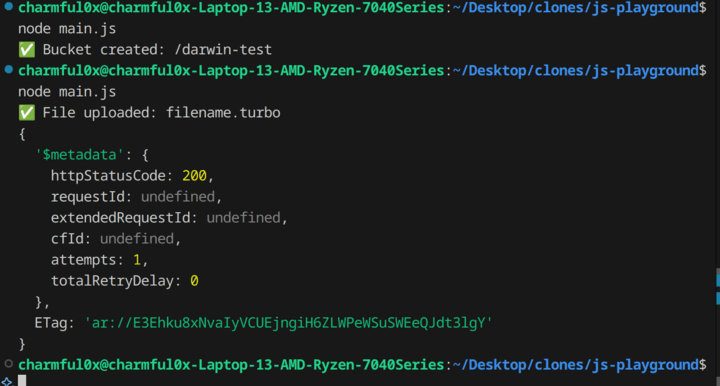

All you need to start using the Arweave-powered S3 data pipeline (Turbo Arweave) is a load_acc API key and an AWS S3 SDK, and the rest works out of the box. Here’s an example - putting an object in an existing bucket:

    import { S3Client, PutObjectCommand } from "@aws-sdk/client-s3";

    async function uploadFile(bucketName) {
      const key = "hello.txt"; // The name (key) the object will be stored under
      const fileContent = new TextEncoder().encode("hello world from load s3"); // Example file content in bytes

      const accessKeyId = process.env.LOAD_ACCESS_KEY; // your load_acc API key
      const secretAccessKey = ""; // meant to be empty

      const s3Client = new S3Client({
        region: "eu-west-2",
        endpoint: "https://s3.load.rs",
        credentials: {
          accessKeyId: accessKeyId,
          secretAccessKey: secretAccessKey,
        },
        forcePathStyle: true,
      });

      try {
        const command = new PutObjectCommand({
          Bucket: bucketName,
          Key: key,
          Body: fileContent,
          Metadata: {
            "uploader-api": "turbo", // to push object to Arweave
          },
        });

        const result = await s3Client.send(command);
        console.log("✅ File uploaded:", key);
        console.log(result);
        return result;
      } catch (error) {
        console.error("❌ Error uploading file:", error);
      }
    }

    uploadFile("your-bucket-name");

For more examples, check out this repository

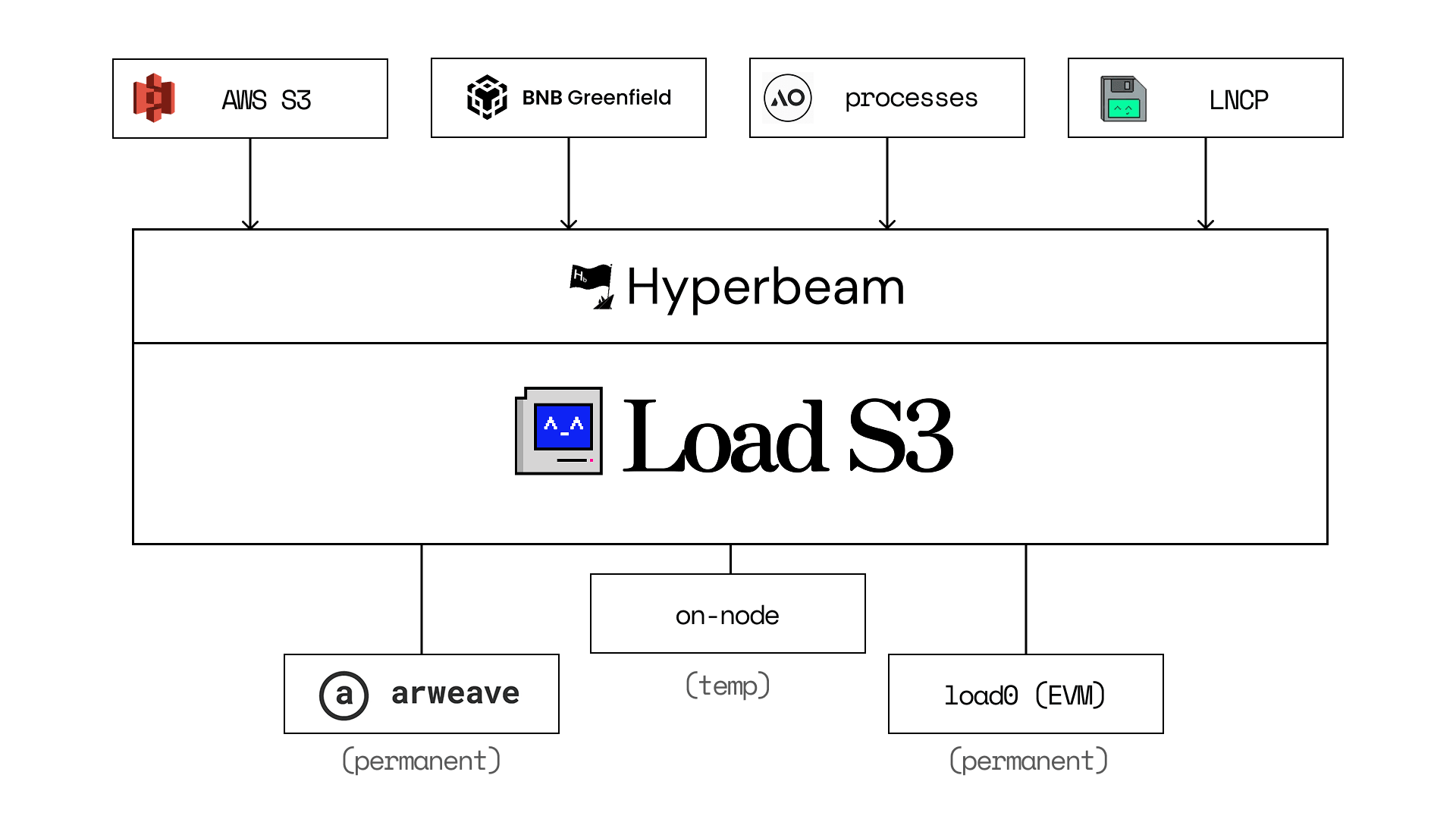

Load S3 as a storage provider (alpha drop)

S3 compatibility, smart standards and a modular design make it possible to plug Load S3 in as a storage provider in other networks.

Dropping alpha here: given its architecture as a modular storage provider, the next Load S3 release will support HyperBEAM-powered temporary storage, launching with a client capacity of ~300 TB at a price 7.7x lower than AWS S3.

Notably, this will allow platforms like BNB Greenfield to use Load S3 as Greenfield’s route to Arweave permanence. Greenfield has grown over 10x this year to a total network size of 124 TB across 8 providers. Load S3 will be the first provider in the network with native Arweave functionality, enabling Greenfield users to smoothly switch their data to immutable mode.

Commit by commit, release by release, we are getting closer to our ultimate goal: the onchain data center. We are focusing on creating tools, frameworks and protocols to onboard more data into Arweave.